A Technical Review Of Nanosystems

By Jacob Rintamaki

UPDATES:

(May 13th, 2024) Wow, it's been a while since I've worked on this review. I will be updating it (going through revisions), since I think that by the end of the summer I will be able to produce a very detailed, in-depth version (which will be necessary for convincing more people that this stuff could actually work); however, most of my effort is currently going into nanotechnology experiments. I am aware that many of the links are broken, and I am working on fixing that. -Jacob

tl;dr

The math of Nanosystems seems to work well enough to be interesting (even if it's not as fast/strong/etc. as the book suggests), yet only a handful of relevant physical experiments have been published.

I’m going to go do more of these relevant physical experiments and put the results on arXiv.

My first experimental goal is to recreate this paper (where the authors are able to remove and deposit Si atoms on a Si(111)-7x7 surface using an NC-AFM at 78K under UHV conditions).

I would ideally like to have recreated this experiment by June 20th, 2024 (3 months from the publication of this review, and the summer solstice!), but that's very, very, very, very ambitious.

However, sometimes very, very, very, very ambitious timelines can enable us to achieve the near-impossible.

If you think you could help me achieve this very, very, very, very ambitious goal (via advice, connections, equipment, money, etc.) please email me at jrin@stanford.edu or DM me on Twitter/X at @jacobrintamaki.

Back to work now.

Table Of Contents

Volume 1: The First

Motivation

Drama

Technical Progress

Nanosystems Preface

Chapter 1: Introduction and Overview

Chapter 2: Classical Magnitudes and Scaling Laws

Chapter 3: Potential Energy Surfaces

Volume 2: Back From The Desert

Chapter 4: Molecular Dynamics

Chapter 5: Positional Uncertainty

What Other Techniques Besides AFMs Are Interesting?

Additional Ideas I've Discussed

Responses To Emails That Don't Fit Anywhere Else

Volume 3->N: Coming soon!

Chapter 6: Transitions, Errors, and Damage

Chapter 7: Energy Dissipation

Chapter 8: Mechanosynthesis

Chapter 9: Nanoscale Structural Components

Chapter 10: Mobile Interfaces and Moving Parts

Chapter 11: Intermediate Subsystems

Chapter 12: Nanomechanical Computational Systems

Chapter 13: Molecular Sorting, Processing, and Assembly

Chapter 14: Molecular Manufacturing Systems

Chapter 15: Macromolecular Engineering

Chapter 16: Paths to Molecular Manufacturing

Appendix A: Methodological Issues in Theoretical Applied Science

Appendix B: Related Research

Experimental Section

Experimental Plan

Volume 1: The First

Motivation

When you hear the word "nanotechnology," what do you think of?

To many materials scientists, the word nanotechnology conjures ideas of carbon nanotubes and ever-more-advanced semiconductor fabrication plants trying to cram billions of transistors onto AI chips.

To some science fiction fans, nanotechnology looks more like a swarm of self-replicating robots that devour everything—a powerful predator.

But to Eric Drexler, the author of Nanosystems, nanotechnology doesn't look like carbon nanotubes or killer robots but rather a super-fast 3D printer that could make many non-edible physical products atom by atom.

Drexler calls this vision of nanotechnology APM (atomically precise manufacturing), and if it were invented, it would be one of the most important general-purpose technologies ever.

Here's an example: if APM worked according to Drexler's calculations, it could produce non-edible physical products ten times faster than traditional manufacturing at a tenth of the price. Even better, the product would be of ten times higher quality than its conventionally manufactured counterpart, and the process would be more sustainable than practically every other manufacturing process we have today. Finally, to make things even more absurd, it's likely that the "ten times" metrics I mentioned earlier are underrating the full potential of APM.

Let me repeat that again: it's likely that the "ten times" metrics I mentioned earlier are UNDERRATING the full potential of APM.

That's incredible, but we've only scratched the surface of what APM can do.

In stories and research papers written by futurists such as Ray Kurzweil, Robin Hanson, Neal Stephenson, Eliezer Yudkowsky, Nick Bostrom, and others, APM could bring about an age of abundant wealth and scientific knowledge, allowing us to cure diseases, build ultra-powerful computers, and harness the full power of our sun for energy. Some of these futurists also warn that APM could be used (particularly by advanced AI systems) to wipe out all of humanity, but, luckily, I don't think we have to worry about APM wiping out humanity.

Yet, taking a step back, despite all of this attention from prominent tech futurists, surprisingly little technical content has been written about the true feasibility of APM in recent years, and most of what has been written is of low quality. To make matters worse, almost no physical experiments aimed at validating APM have been published in recent years.

Now, this doesn’t mean that APM is impossible, since the theoretical foundations for computers and rockets were developed many decades before practical versions of either were built, but there’s still a lot of work left that has to be done to achieve APM.

So then, let’s get started; we don’t have a moment (or atom) to waste.

Drama

A common criticism of Nanosystems is that, despite being visionary, there’s been very little experimental progress towards APM since it was published in 1992.

(Now, this doesn't inherently mean that the technology is bunk, since both computers (Babbage, Lovelace) and rockets (Tsiolkovsky) had their theoretical foundations somewhat established decades before working versions were built, but it does inspire skepticism in me about the feasibility of APM.)

The next section of this review covers the last 30 years of technical progress (it’s mixed) towards APM, but there was a large social element that derailed Drexler’s vision.

This section focuses on that social element, which started in 1986, when Drexler published the popular science book "Engines of Creation" that he wrote to get the public excited about APM. In this book, Drexler brings up the now-infamous idea of “Grey Goo,” a catastrophe where nanotechnological replicators would exponentially self-replicate, consuming the entire Earth in the process.

Drexler then downplayed the idea, arguing that, while not impossible, such replicators would be inefficient (among other reasons), but by then it was too late.

In the year 2000, Wired magazine published an article by Bill Joy, a cofounder of Sun Microsystems, that warned of the existential dangers of nanotechnology, along with robotics and genetic engineering, because they could self-replicate.

Robert Freitas, a close collaborator of Drexler, then wrote a paper entitled “Some Limits to Global Ecophagy,” which argued against the idea of a surprise takeover by Grey Goo. (Freitas argues that these “Grey Goo” incidents are still possible, just that they would be easily detectable and preventable due to the large amount of heat they would generate.)

However, this paper wasn’t enough, and the public’s fear of Grey Goo led Nobel Prize-winning chemist Richard Smalley, famous for his co-discovery of buckminsterfullerene (also known as "buckyballs"), to write the article “Of Chemistry, Love, and Nanobots” for the September 2001 edition of Scientific American. In this article, which started the two-year series of back-and-forth correspondence known as the "Drexler-Smalley Debate," Smalley argued that Drexler’s vision of nanotechnology was flawed for two reasons: sticky fingers and fat fingers. "Sticky fingers" refers to Smalley’s claim that atoms will stick to the manipulator’s atoms, making mechanosynthesis impossible, and "fat fingers" refers to the claim that there isn’t enough room at a reaction site for the 10 or so atoms required to do mechanosynthesis.

Drexler rebutted the sticky fingers claim by citing multiple experimental papers showing that atom-by-atom placement is possible, and the fat fingers claim by saying that mechanosynthesis doesn’t require those 10 atoms in such a small space (which would indeed be impossible), but rather just three or so (for example, a CO-functionalized tip on an AFM). Drexler then wrote two additional letters to Smalley: one in April, due to his concerns about Smalley slandering his work, and one in June, calling Smalley out for not responding to him. Drexler and Smalley’s final exchange came in 2003 with a “Point-Counterpoint” feature in Chemical & Engineering News (C&EN), where Smalley wrote that Drexler’s vision of grey goo was scaring the children, while Drexler advocated for more systems research to develop nanotechnology.

Although I believe that Drexler won the debate, the funding from the newly founded National Nanotechnology Initiative (NNI) went almost entirely to Smalley’s vision of nanotechnology, which focused more on chemistry done at small scales than on Drexler’s vision of atomically precise nanomachines. Drexler’s 2007 Productive Nanosystems roadmap was also not funded, leaving Drexler’s vision seemingly defeated.

I would like to note that the loss of scientific momentum in the field of APM was arguably more important than the lack of funding, as APM was derided for many years as pseudoscience. As an example of this derision, Drexler’s 2013 book “Radical Abundance” was reviewed by C&EN (a widely read chemistry news magazine) under the following title: “Delusions of Grandeur: [The] author insists ‘atomically precise manufacturing’ will transform civilization, but he’s not dealing with reality.”

Technical Progress

There are two main ways that APM could work: either one could have proteins self-assemble in a liquid into enzyme-like molecular machines, or one could have scanning probe microscopes (SPMs) pick up and place down atoms in a cold vacuum environment to make stiff molecular machines.

The first approach has been extensively covered by Adam Marblestone in his 85-page bottleneck analysis of positional chemistry, as well as by Richard Jones in his book "Soft Machines." I would like to note that Soft Machines also makes criticisms of APM that are already very rigorously addressed in Nanosystems, primarily around positional uncertainty, the feasibility of mechanosynthesis, and error correction mechanisms, but the rest of the book is an interesting read.

I will be covering the second approach for the rest of this review, because I see it as the final form of APM. This is not to say that the soft form of APM couldn't be valuable for bootstrapping up to the SPM form of APM, but it is not my focus and not what I will be running experiments on.

Now, this particular section will focus on two questions, with the first being “What are the big technical milestones required for APM?” and the second being, “So then, what work has been done for vacuum and SPM-based mechanosynthesis in APM in the last 30 or so years?”

This section will not focus on the question of “so how do we accomplish the remaining big technical milestones required for APM?” because that will be addressed in a later section, Experimental Plan.

Finally, before answering either question, I would like to note that one cannot physically buy "an SPM," as SPM refers to a family of microscopy techniques. Scanning Tunneling Microscopes (STMs) and Atomic Force Microscopes (AFMs) are examples of physical microscopes you can buy, and both belong to the SPM family. We will be focusing on AFMs instead of STMs because AFMs work on any type of surface, even if the surface isn’t conducting or semiconducting, but both types of SPM are interesting. (If the reader wants to read up more on how AFMs and STMs work, this 2021 Nature Reviews Methods Primers article on SPMs (https://www.nature.com/articles/s43586-021-00033-2) does a great job.)

Now, to answer the first question, I would break the development of APM into five steps:

1. Mechanosynthesis (of any kind)

2. Making a 3D structure via mechanosynthesis

3. Electrical Motors

4. Assemblers

5. The First Macroscopic Object

The first step, mechanosynthesis of any kind (it doesn’t need to be super fancy; it just means using mechanical means to drive chemical reactions in precise ways), has been demonstrated many times over. Originally, there was Don Eigler's 1990 “IBM” paper (whose actual title was “Positioning single atoms with a scanning tunneling microscope”). It consisted of spelling out the IBM logo using 35 xenon atoms on a nickel substrate cooled to 4K (likely with liquid helium), but the experimental details were sparse. In the few short years that followed, two more papers, “Atomic emission from a gold-scanning tunneling microscope tip” (https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.65.2418) and “An atomic switch realized with the scanning tunneling microscope” (https://www.nature.com/articles/352600a0), showed that moving atoms was not a fluke. However, both relied on STMs rather than AFMs.

Then, in 2003, a group of Japanese scientists published “Mechanical Vertical Manipulation of Selected Single Atoms by Soft Nanoindentation Using Near Contact Atomic Force Microscopy” (https://journals.aps.org/prl/pdf/10.1103/PhysRevLett.90.176102), which is the paper I am planning to replicate for my first experiment. They were able to use an nc-AFM under UHV at 78K to pick up and place down Si atoms on a Si(111)-7x7 surface. In my opinion, this is arguably one of the most important papers in the history of APM, because it showed that Drexler’s version of mechanosynthesis was possible.

However, after that, there was a bit of an experimental dark age. The famous “[A] Minimal Toolset for Positional Diamond Mechanosynthesis” (2008) (https://www.molecularassembler.com/Papers/MinToolset.pdf) was released, claiming that diamondoid mechanosynthesis should work, but it was a theoretical study, and no publicly released experiments have directly followed up on it.

Around this time, Philip Moriarty (who will be mentioned a lot later) had the idea to run more of these experiments to see whether Drexler was right, leading to grants from the EPSRC (UK) (https://gow.epsrc.ukri.org/NGBOViewGrant.aspx?GrantRef=EP/G007837/1) to study AFM-based mechanosynthesis. It also led to the Phoenix-Moriarty debate series, which the reader can look up here: Part 1 (http://www.softmachines.org/PDFs/PhoenixMoriartyI.pdf), Part 2 (http://www.softmachines.org/PDFs/PhoenixMoriartyII.pdf), and Part 3 (http://www.softmachines.org/PDFs/PhoenixMoriartyIII.pdf).

There have been additional proposals to prove the viability of APM (https://www.energy.gov/eere/amo/articles/radical-tool-3d-atomically-precise-sculpting), including one from James K. Gimzewski (who was a co-PI on the Sautet paper we’ll cover later), but none of them has shown any progress.

For a bit of a side quest around this time, in 2009 Leo Gross (https://research.ibm.com/blog/leo-gross-APS-fellow) worked on early experiments attaching CO molecules to the tip of an AFM, which enabled significantly better imaging. This didn’t directly lead to IBM's seminal “A Boy and His Atom” video (https://www.youtube.com/watch?v=oSCX78-8-q0), but I suspect that the video wouldn’t have been possible without high-resolution scanning probe imaging to image and then position atoms on a 2D surface. “A Boy and His Atom” is not a paper; IBM didn’t release anything besides a brief, non-technical video, and I’m surprised more folks haven’t done these sorts of things. Ah, well, more for me. (Correction from Jeremy: Leo Gross didn't create qPlus; that was done by Franz Giessibl back in the 90s. Leo did have the first CO imaging experiment, though. qPlus is the name of the hardware for that quartz tuning-fork df-AFM technique.)

In 2014, an additional paper demonstrated “Vertical atomic manipulation with dynamic atomic-force microscopy without tip change via a multi-step mechanism” (https://www.nature.com/articles/ncomms5476). The “without tip change” portion is less exciting than it sounds and does not enable long-term 3D mechanosynthesis, but the paper was unusually well documented. The drawback is that they used an unusual surface, which I don’t think is as easily reproducible as the more standard Si(111)-7x7 surface.

Now, for making steps towards 3D mechanosynthesis via AFM, these two papers, “A kilobyte rewritable atomic memory” (https://www.nature.com/articles/nnano.2016.131) and “Assigning the absolute configuration of single aliphatic molecules by visual inspection” (https://www.nature.com/articles/s41467-018-04843-z), are interesting. The latter paper is of particular interest because it actually mentions examining diamondoid structures, which is important because without diamondoid, there isn’t really anything that has the stiffness necessary in order to make molecular machines.

Bringing us up to where we are today, there are two additional theory papers of interest: the 2021 paper from Philip Moriarty entitled “Cyclic Single Atom Vertical Manipulation on a Nonmetallic Surface” (https://pubs.acs.org/doi/full/10.1021/acs.jpclett.1c02271) and the 2023 paper “Controlled Vertical Transfer of Individual Au Atoms Using a Surface-Supported Carbon Radical for Atomically Precise Manufacturing” (https://pubs.acs.org/doi/full/10.1021/prechem.3c00011) by Philippe Sautet’s UCLA group. These two papers take different theoretical approaches to the same idea of vertical manipulation with an AFM in order to build 3D structures, but ultimately, I don’t think either is the right approach. The Moriarty paper relies heavily on crashing into the surface being studied rather than, say, creating an ALD layer that would make it easier to retrieve feedstock molecules, and the Sautet paper requires a surface-supported carbon radical to make the energy calculations work, which is not very scalable.

That’s basically where we are now, but in order to get to APM, I believe there are three more conceptual hurdles that can serve as good benchmarks beyond 3D structures: motors, assemblers, and the first macroscopic object.

You could argue that motors aren’t a qualitative leap compared to mechanosynthesizing somewhat small 3D structures, but because they deal with electromagnetism and are not static objects (motors move!), I think they deserve to be their own milestone. As discussed in Chapter 11, they are also devices that do not remain solely in their ground state in transition-state-theory terms, which makes them qualitatively different from other mechanosynthesized 3D structures. Mechanosynthesized motors currently do not exist, and the traditional synthesis of molecular motors, while impressive (https://en.wikipedia.org/wiki/Synthetic_molecular_motor), is nowhere near a Drexler-level motor in terms of performance.

Assemblers are significantly more controversial than motors. The design shown in Nanosystems is 4 million atoms, and even then, it is not well understood. It seems unlikely that this arm, which would only be able to place atoms as fast as an SPM, would be a good choice for the first assembler; something smaller, like a replicative pixel idea (none of which has been fleshed out due to its speculative nature, so take it with a large grain of salt), seems more likely to work. I see the assembler as the pathway between the microscopic and macroscopic worlds, which is why it’s the fourth conceptual hurdle needed for APM. (Note from Mark Friedenbach: Where is this claim coming from? The limiting factor for SPM pick-and-place speed is the macro-scale piezoelectrics. A nanoscale arm wouldn't be so hindered.) (Note from Jacob Rintamaki: Furthermore, this replicative pixel might look like a hexagon with machinery that would be able to construct more of itself. There's interesting research at the MIT aero/astro department on 3D printers that can print themselves, which could be worth looking into.)

However, if assemblers can be built, then the final conceptual hurdle of making a macroscopic object seems within reach. Some might argue that making a macroscopic object once you already have assemblers is trivial, but even if it follows in principle, getting experimental ideas to work is always more difficult than expected. Despite Drexler working through the calculations in Chapters 13 and 14 of Nanosystems, this is an area I will remain quite skeptical of until I see it with my own eyes.

Here’s an additional list of related papers that may be of interest:

Atomically Precise Manufacturing of Silicon Electronics

Preface

Drexler’s Big Idea: The end state of materials science, chemistry, microtechnology, and manufacturing is the precise molecular control of complex structures. In other words, molecular nanotechnology.

Drexler’s Guide for Many Readers: General science background, a student interested in a career in nanotechnology, a physicist, a chemist, a molecular biologist, a materials scientist, a mechanical engineer, or a computer scientist.

Interesting Point: If you look at this from just one perspective, it looks wrong. For example, to pure chemists or pure mechanical engineers, molecular nanotechnology looks wrong, but holistically, it works.

Criticism of Criticism: Some critics see bad nanotechnology designs proposed and then use that to discredit the entire field of molecular nanotechnology, among other things. This is a poor mindset from would-be critics.

Good epistemics: Drexler includes a section distinguishing “will be” from “would be”; he is careful not to advertise devices that don't exist as if they do.

Thoroughness: Drexler explicitly lays out his approach to citations as “Boltzmann isn’t cited, and neither is novel nanomechanical stuff” (paraphrased), but he tries to cite as much as possible.

Apologies: Drexler apologizes for being a theorist in an experimentally heavy field, since the hard experimental work has yet to come.

Chapter 1: Introduction and Overview

Chapter Goal: It’s just an introduction; nothing unusual here.

A legendary opener: “The following devices and capabilities appear to be both physically possible and practically realizable:

Programmable positioning of reactive molecules with ~0.1 nm precision.

Mechanosynthesis at >10^6 operations/device * second

Mechanosynthetic assembly of 1 kg of objects in <10^4 s

Nanomechanical systems operating at ~10^9 Hz

Logic gates that occupy ~10^-26 m^3 (~10^-8 μm^3)

Logic gates that switch in ~0.1 ns and dissipate <10^-21 J

Computers that perform 10^16 instructions per second per watt

Cooling of cubic-centimeter, ~10^5 W systems at 300K

Compact 10^15 MIPS parallel computing systems

Mechanochemical power conversion at >10^9 W/m^3

Electromechanical power conversion at >10^15 W/m^3

Macroscopic components with tensile strengths >5 x 10^10 Pa

Production systems that can double capital stocks in <10^4 s”

Here’s the context and math to double-check, which will involve the use of future chapters.

Programmable positioning of reactive molecules with ~0.1 nm precision.

Given that mechanosynthesis involves moving things atom by atom, this claim is essentially a restatement of it, and, as covered in the Technical Progress section of this review, it has already been demonstrated.

Mechanosynthesis at >10^6 operations/device * second

This figure is based on operation in a vacuum. 10^6 operations/device * second sounds fast at first, but if it is done atom by atom, it corresponds to an assembly frequency of 1 MHz. Resonant frequencies for atomic force microscopes can be in the hundreds of megahertz (https://www.nature.com/articles/s41378-022-00364-4), so if one in every 100 of those vibrations ends up being able to pick up, place down, and then adjust, it could be reasonable to do 10^6 operations/device * second. (Imaging at the macroscale could theoretically be done with a laser interferometer instead of a CO-functionalized tip once feedback-force curves have been characterized enough, allowing for more breathing room; I would also note that this is a rough approximation.) This would be more constrained for mechanosynthesis-designed AFMs at the nanoscale, but it’s not unreasonable.

(Note from Mark Friedenbach: You later address the "Mechanosynthesis at >10^6 operations/device * second" claim by looking at piezoelectric-controlled SPMs, but that's got nothing to do with nanoscale APM machinery. You say "This would be more constrained for mechanosynthesis-designed AFMs at the nanoscale," which I assume is a typo. Nanoscale AFMs would be less constrained.)

(Note from Jeremy: This is in the Z direction (vertical); X and Y directions tend to be much lower, and MEMS AFMs with full XYZ dimensions are in the early days. ICSPI and Mohammad Rezi (sp?) have systems like that, but they are not quite up to snuff on the XYZ accuracy necessary for APM yet. Preliminary work with bulk systems like Omicron, RHK, or Unisoku systems is probably more reliable during phase I bootstrapping.)
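The one-in-100 estimate above is simple division, and a minimal sketch makes the assumption explicit. It assumes a 100 MHz resonance (the low end of "hundreds of megahertz") and uses 500 MHz as an arbitrary higher example; `ops_per_second` is a hypothetical helper, not part of any AFM control software.

```python
def ops_per_second(resonant_hz: float, cycles_per_op: int) -> float:
    """Completed operations per second if one succeeds every `cycles_per_op` oscillations."""
    return resonant_hz / cycles_per_op


# Assumed resonances: 100 MHz (low end of the cited range) and 500 MHz (illustrative).
for f_res in (1e8, 5e8):
    rate = ops_per_second(f_res, cycles_per_op=100)
    print(f"{f_res / 1e6:.0f} MHz -> {rate:.0e} operations/device*second")
```

The ratio of oscillations to completed operations is the knob that matters here: every factor of 10 lost to failed or repeated attempts costs a factor of 10 in throughput.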

Mechanosynthetic assembly of 1 kg of objects in <10^4 s

10^4 seconds = 10,000 seconds, or roughly 2.78 hours. In Section 14.7, Drexler’s calculations for convergent assembly add up to a 1 kg system building a 1 kg product in roughly 1 hour while dissipating 1.1 kW of heat. This argument relies on convergent assembly rather than on having one machine place every single atom (even at 10^6 operations per device * second, a single device would take 10^18 seconds, about 3 x 10^10 years, to build an object with 10^24 atoms, which is quite long!). This is one area where I’m actually skeptical of the calculations, given that we are nowhere close to convergent assembly, so I’m leaving it in a state of limbo. Much of the argument rests on the assumption that we’ve identified the majority of frictional modes and other loss mechanisms (the kind that have hindered nanomechanical computing) well enough to assemble macroscopic objects exponentially.
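The arithmetic in this paragraph can be checked with a short sketch. It does not model convergent assembly itself; it only shows why a single serial device is hopeless and how many devices would have to work in parallel, assuming one atom placed per operation at 10^6 operations per second. The helper names (`serial_assembly_seconds`, `devices_needed`) are hypothetical.

```python
SECONDS_PER_HOUR = 3600.0
SECONDS_PER_YEAR = 3.15576e7  # 365.25-day year


def hours(seconds: float) -> float:
    """Convert seconds to hours."""
    return seconds / SECONDS_PER_HOUR


def serial_assembly_seconds(n_atoms: float, ops_per_second: float) -> float:
    """Time for one device placing one atom per operation."""
    return n_atoms / ops_per_second


def devices_needed(n_atoms: float, ops_per_second: float, target_seconds: float) -> float:
    """Parallel devices needed to place n_atoms within target_seconds."""
    return n_atoms / (ops_per_second * target_seconds)


print(f"10^4 s = {hours(1e4):.2f} h")
serial = serial_assembly_seconds(1e24, 1e6)
print(f"one device: {serial:.0e} s (~{serial / SECONDS_PER_YEAR:.1e} years)")
print(f"devices to finish in 1 h: {devices_needed(1e24, 1e6, SECONDS_PER_HOUR):.1e}")
```

Roughly 3 x 10^14 devices working in parallel is the scale convergent assembly has to organize, which is why the feasibility of that architecture carries so much of the argument.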

Nanomechanical systems operating at ~10^9 Hz

In Chapter 14 (specifically Section 14.5.3), Drexler mentions devices operating at around 10^6 Hz and rarely mentions 10^9 Hz (although Section 10.10.2c mentions diamondoid nanomechanisms operating for years at >10^9 Hz). If we take a fast AFM into account, this figure doesn’t seem completely unreasonable (although it's closer to 10^8 Hz), but I would rather stick to around 10^6 Hz, since that’s what Chapter 14 uses for its convergent assembly math, and it's less extreme than a billion ticks per second (1 GHz).

Logic gates that occupy ~10^-26 m^3 (~10^-8 μm^3)

Right now, as of 2024, we’re around the 3 nm “node” (semiconductor industry terminology for a technology generation), which has about a 48 nm gate pitch and a 24 nm metal pitch. Roughly modeling a logic gate as a cube with 24 nm sides gives a volume of around 1.3824*10^-23 m^3. There’s still room to shrink with current approaches, so logic gates could get even smaller, but Drexler's figure would represent logic gates roughly three orders of magnitude smaller in volume (about one order of magnitude in linear size). This claim isn’t as wild as Drexler’s other claims, but perhaps what's wild is that Moore’s Law has continued for so long. (FinFET, a pivotal technology, wasn’t even invented until 7 years after Nanosystems!)
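The comparison above can be reproduced in a few lines. This keeps the same crude model (a gate as a cube with a 24 nm edge, taken from the metal pitch), which is an assumption rather than how real gate area is measured; it reports the ratio both in volume and in linear size, since those differ by a cube root.

```python
def cube_volume_m3(side_nm: float) -> float:
    """Volume in m^3 of a cube whose edge is given in nanometres."""
    side_m = side_nm * 1e-9
    return side_m ** 3


DREXLER_GATE_VOLUME = 1e-26           # m^3, the Nanosystems figure
modern_gate = cube_volume_m3(24.0)    # crude 24 nm cube model, ~1.38e-23 m^3
volume_ratio = modern_gate / DREXLER_GATE_VOLUME
linear_ratio = volume_ratio ** (1 / 3)

print(f"modern gate ~ {modern_gate:.3e} m^3")
print(f"volume ratio ~ {volume_ratio:.0f}x, linear ratio ~ {linear_ratio:.1f}x")
```

A roughly 1,400x gap in volume corresponds to only about an 11x gap in linear dimension, which is why the claim looks less extreme once framed as feature size.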

Logic gates that switch in ~0.1 ns and dissipate <10^-21 J

Chapter 12 appears to take the signal propagation speed in diamond rods as roughly a tenth of the speed of sound in diamond, about 1.7 km/s; at that speed, a signal crosses 1 μm in about 0.6 ns. In Section 12.3.4d, the figure given is 0.05 maJ (a milliattojoule is 10^-21 joules), which means that yes, it dissipates under 10^-21 joules. Comparing this to modern logic gates, which usually switch on picosecond (10^-12 second) timescales, rod logic computers don’t perform as well on speed, but modern gates typically dissipate around 10^-18 joules per switch, so rod logic could have an advantage in energy efficiency. Personally, I suspect the main advantage of rod logic computing (Chapter 12) is physical compactness rather than speed or power efficiency, where nanoelectronics could do quite well. Drexler is wary of nanoelectronics because they exhibit quantum effects, which make them non-trivial to model.
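A quick sketch of the two numbers in this paragraph. It assumes the ~1.7 km/s rod signal speed as I read it from Chapter 12 and the rough 10^-18 J per switch figure for modern gates quoted above; neither is a measured value, and the helper name is hypothetical.

```python
def propagation_time_s(distance_m: float, speed_m_per_s: float) -> float:
    """Time for a mechanical signal to cover distance_m at speed_m_per_s."""
    return distance_m / speed_m_per_s


ROD_SIGNAL_SPEED = 1.7e3     # m/s, my reading of Chapter 12 (assumption)
MILLIATTOJOULE = 1e-21       # J

t = propagation_time_s(1e-6, ROD_SIGNAL_SPEED)   # across 1 micrometre
rod_switch_energy = 0.05 * MILLIATTOJOULE        # Section 12.3.4d figure, 5e-23 J
cmos_switch_energy = 1e-18                       # rough modern figure quoted above, J

print(f"1 um traversal: {t * 1e9:.2f} ns")
print(f"rod logic energy advantage: ~{cmos_switch_energy / rod_switch_energy:.0f}x")
```

The sketch makes the trade-off concrete: rod logic loses on latency (sub-nanosecond rather than picosecond) while the claimed per-switch dissipation is about four orders of magnitude lower.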

Computers that perform 10^16 instructions per second per watt

The conclusions in Chapter 14.12.9 mention this, but I don’t see the explicit calculations carried out anywhere in Nanosystems. The claim is also hard to evaluate because instructions per second per watt isn’t used much nowadays compared to FLOPS (floating-point operations per second), thanks to the deep learning and GPU revolutions (Nvidia did not exist in 1992!). Still, this would be many orders of magnitude more than current computers, even GPUs, and if I had to guess, the gain would come mainly from energy efficiency rather than raw computational power. I would leave the verdict on this one as “plausible, but very vague and needs a lot more math.”
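Since 1 W is 1 J/s, instructions per second per watt is just instructions per joule, so the claim can at least be turned into an energy budget. This is my own bookkeeping, not Drexler's missing derivation: it pairs the 10^16 figure with the <10^-21 J per gate switch bound from the previous claim to see how many switches each instruction could afford. Both helper names are hypothetical.

```python
def joules_per_instruction(ips_per_watt: float) -> float:
    """Invert instructions/(second*watt); since 1 W = 1 J/s, that is instructions/J."""
    return 1.0 / ips_per_watt


def switches_per_instruction(ips_per_watt: float, joules_per_switch: float) -> float:
    """Gate switches that fit inside one instruction's energy budget."""
    return joules_per_instruction(ips_per_watt) / joules_per_switch


budget = joules_per_instruction(1e16)                 # energy per instruction, J
switches = switches_per_instruction(1e16, 1e-21)      # at the 1e-21 J per switch bound
print(f"{budget:.0e} J/instruction, ~{switches:.0e} gate switches per instruction")
```

A budget on the order of 10^5 switches per instruction is generous for a simple processor, which is consistent with the "plausible, but needs more math" verdict rather than a refutation.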

Cooling of cubic-centimeter, ~10^5 W systems at 300K

In Section 11.5, Drexler directly addresses the cooling of cubic-centimeter, 10^5 W systems. First, note that the analysis is done at 273K (the melting point of ice) rather than 300K. There are two interesting points being made. The first is that the design of distinct heat-carrying bodies parallels the use of red blood cells as oxygen carriers in the circulatory system. This is what the best of Drexler looks like to me: looking to biology for inspiration and then using deterministic manufacturing to build better systems for various purposes. The second interesting point draws on Hiemenz’s 1986 “Principles of Colloid and Surface Chemistry”: an expression derived from the Einstein relationship relates the viscosity of a fluid suspension η, the volume fraction of spherical particles f_vol, and the viscosity without the particles η₀ as η/η₀ = (1 - f_vol)^-2.5, and it is reasonably accurate for f_vol <= 0.4. At a volume fraction of 0.4, packaged ice particles increase the fluid viscosity by a factor of 3.6, and if pentane is used as a carrier, the viscosity of the resulting suspension is 8*10^-4 N*s/m^2. On melting, ice particles at this volume fraction absorb 1.2*10^8 J per m^3 of coolant. Plugging these numbers in, the claimed cooling capacity makes sense, both mathematically and by analogy.
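The two figures quoted from Section 11.5 can be checked directly. This sketch assumes standard textbook values for ice (latent heat of fusion ~3.34 x 10^5 J/kg, density ~917 kg/m^3), which are my inputs rather than numbers taken from Nanosystems, and it adds one derived quantity: how much coolant would have to flow to carry away 10^5 W.

```python
ICE_LATENT_HEAT = 3.34e5   # J/kg, latent heat of fusion of ice (assumed standard value)
ICE_DENSITY = 917.0        # kg/m^3, density of ice (assumed standard value)


def relative_viscosity(f_vol: float) -> float:
    """eta/eta0 = (1 - f_vol)^-2.5, quoted as reasonable only for f_vol <= 0.4."""
    if not 0.0 <= f_vol <= 0.4:
        raise ValueError("expression is only quoted as accurate for 0 <= f_vol <= 0.4")
    return (1.0 - f_vol) ** -2.5


def heat_absorbed_per_m3(f_vol: float) -> float:
    """Joules absorbed per m^3 of coolant when its ice fraction melts."""
    return f_vol * ICE_DENSITY * ICE_LATENT_HEAT


f = 0.4
q = heat_absorbed_per_m3(f)
flow_m3_per_s = 1e5 / q    # coolant volume needed per second to remove 1e5 W

print(f"viscosity factor: {relative_viscosity(f):.1f}")
print(f"heat absorbed: {q:.2e} J/m^3")
print(f"coolant flow for 1e5 W: {flow_m3_per_s * 1e6:.0f} cm^3/s")
```

Both quoted figures (a 3.6x viscosity increase and ~1.2 x 10^8 J/m^3) fall out of these inputs; the flow rate line shows the coolant throughput a cubic-centimeter, 10^5 W system would need under this scheme.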

Compact 10^15 MIPS parallel computing systems

This should be possible. Originally I made a false comparison of MIPS to FLOPS (floating-point operations per second), which Mark Friedenbach pointed out wasn't a good comparison, because both are in use and CPU != FPU. The problem with this claim, though, is that nanomechanical computing has more frictional modes than Drexler originally calculated, so while it would be quite compact and energy efficient when idle (idle power draw being a flaw of MOSFET-type systems), it would likely not replace nanoelectronics. However, nanoelectronics could be made significantly smaller than what we currently have.

Mechanochemical power conversion at >10^9 W/m^3

Section 14.5.5 points to Section 13.3.8. I immediately see problems with this, namely that it involves the reaction 2H2 + O2 -> 2H2O, which is normally explosive (it’s the LH2/LOx reaction that powers many rockets; not all, because many now use methane instead of LH2, in part because methane can be produced in situ electrochemically on Mars). Section 8.5.10 (Transition-metal reactions) is said to provide justification for this, but I’m not sure I see the logic. Besides, mechanochemical power would not be worthwhile compared to electromechanical power conversion, so I’m not too bothered if this figure is quite off.

Electromechanical power conversion at >10^15 W/m^3

Section 11.7.3 (Motor power and power density) covers this claim, and I am immediately skeptical for a couple of reasons. First, Drexler assumes “neglecting resistive and frictional losses,” which is not something I would do lightly. Second, the power density is so large only because the motor's volume is incredibly small and the stiffness of diamondoid structures can support high centrifugal loads. At the microscale these incredible densities would hold, but I would shelve this under “not believable” IF viewed at the macroscale: the total solar radiation the Earth intercepts is about 10^17 watts, so on the order of 100 m^3 of these motors would match it, and 1000 m^3 would be far more than all the sunlight hitting the Earth, which is absurd.
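The solar comparison can be made explicit. This sketch assumes a solar constant of ~1361 W/m^2 and a mean Earth radius of 6.371 x 10^6 m (standard values, not from Nanosystems), computes the sunlight crossing Earth's cross-sectional disc, and compares it with the claimed 10^15 W/m^3 motor power density; like Drexler's figure, it ignores all losses.

```python
import math

MOTOR_POWER_DENSITY = 1e15   # W/m^3, the Nanosystems figure
SOLAR_CONSTANT = 1361.0      # W/m^2 at Earth's distance (assumed standard value)
EARTH_RADIUS = 6.371e6       # m, mean radius (assumed standard value)


def intercepted_sunlight_w() -> float:
    """Sunlight crossing Earth's cross-sectional disc, in watts."""
    return SOLAR_CONSTANT * math.pi * EARTH_RADIUS ** 2


def motor_power_w(volume_m3: float) -> float:
    """Power at the claimed density, neglecting losses as Drexler does."""
    return MOTOR_POWER_DENSITY * volume_m3


solar = intercepted_sunlight_w()
break_even = solar / MOTOR_POWER_DENSITY
print(f"sunlight intercepted: {solar:.1e} W")
print(f"motor volume matching it: {break_even:.0f} m^3")
print(f"1000 m^3 of motors: {motor_power_w(1000.0) / solar:.1f}x that")
```

Of course, a motor converts power rather than generating it, so the comparison only shows that such a macroscopic motor block could never be fed at its rated density, not that the nanoscale figure is wrong.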

Macroscopic components with tensile strengths >5 x 10^10 N/m^2 (50 GPa)

I don’t like to leave a section like this, because I assume there’s something I must be missing, but I haven’t seen this example specifically mentioned anywhere in Nanosystems. Off the top of my head, though, I suspect that this is wrong (although the real figure is likely much higher than what exists today) because it relies on continuum models at the nanoscale, which are then convergently assembled into macroscopic objects, thus making assumptions that we shouldn’t. The strongest argument I can see for high tensile strengths is that diamondoid is incredibly stiff, and if it were grown in long, thin, macroscopic tubes (like carbon nanotubes, which are also carbon), it would have an incredible tensile strength.

Production systems that can double capital stocks in <10^4 s

In the conclusion of Chapter 14, Drexler mentions that a 1 kg system can build a 1 kg product in about 1 hour, dissipating about 1.1 kW of heat. That is less than the roughly two and three-quarter hours that 10^4 seconds implies, and it is perhaps the most astonishing claim on this first page of Nanosystems besides the compact 10^15 MIPS computer. The mathematics for how this is feasible is in Section 14.4, and it doesn’t look too unusual: Drexler takes the components’ stated operating frequencies, cuts them by a factor of three as a margin of error, and the result is still potentially quite efficient. The problem with nanomechanical computing is that as it got studied, more frictional modes were discovered, reducing its efficiency. If doubling capital stocks in roughly an hour turns out not to be possible, it would likely be because the compounded efficiencies of the motors, gearboxes, sensors, etc. are much worse than Drexler makes them out to be. (Mark Friedenbach notes that the relative inefficiencies of rod logic computing compared to Nanosystems’ analyses wouldn’t apply to strained-ring motors or gearboxes. I would rebut that the frictional modes of nanomachines are still poorly understood, and that we need to run more experiments on them, since superlubricity is also an unusual phenomenon.)
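
The arithmetic behind these numbers, as a small Python sketch. It only uses the figures quoted above (1 kg product, about 1 hour, about 1.1 kW), and it idealizes doubling as happening every hour with unlimited feedstock, power, and cooling, which no real system would have.

```python
# Figures as summarized above from Nanosystems ch. 14; everything else is arithmetic.
DOUBLING_BOUND_S = 1e4       # the "<10^4 s" capital-doubling claim
BUILD_TIME_S = 3600.0        # ~1 hour to build a 1 kg product
HEAT_DISSIPATION_W = 1.1e3   # ~1.1 kW dissipated during the build
PRODUCT_MASS_KG = 1.0

bound_hours = DOUBLING_BOUND_S / 3600.0
energy_per_kg_j = HEAT_DISSIPATION_W * BUILD_TIME_S / PRODUCT_MASS_KG
doublings_per_day = 86400.0 / BUILD_TIME_S
growth_per_day = 2 ** doublings_per_day  # idealized: no resource or cooling limits

print(f"10^4 s = {bound_hours:.2f} h")
print(f"Heat dissipated per kg of product: {energy_per_kg_j / 1e6:.2f} MJ")
print(f"Doublings per day at 1 h each: {doublings_per_day:.0f} "
      f"(growth factor {growth_per_day:.2e})")
```

The heat budget (about 4 MJ per kg of product) is a useful number to keep next to the throughput claim, since cooling is one of the things that would limit compounding in practice.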

Definition Time! (Because definitions will become an issue about 10 years after Nanosystems was written, in the Drexler-Smalley debate and others.)

Molecular Manufacturing: “The construction of objects to complex, atomic specifications using sequences of chemical reactions directed by non-biological molecular machinery.”

Molecular Nanotechnology: “...describes the field as a whole.”

Mechanosynthesis: “Mechanically guided chemical synthesis”

Machine-Phase Systems: A system in which all atoms follow controlled trajectories (within a range determined in part by thermal excitation). Machine-phase chemistry describes the chemical behavior of machine-phase systems, in which all potentially reactive moieties (a portion of a molecular structure having some property of interest) follow controlled trajectories.

Important: Don’t confuse these with old fields; they’re new fields.

CONTEXT: In 1992, positional chemical synthesis was at the threshold of realization: Eigler and Schweizer’s 1990 paper, “Positioning single atoms with a scanning tunneling microscope” (https://www.nature.com/articles/344524a0), had shown that individual atoms could be placed with an STM. However, advanced positional chemical synthesis is still in the realm of design and theory.

Table 1.1: Some known issues, problems, and constraints

| Issue | Where Nanosystems addresses it |
|---|---|
| Thermal excitation | Chapter 5 |
| Thermal and quantum positional uncertainty | Chapter 5 |
| Quantum-mechanical tunneling | Chapter 5 |
| Bond energies, strengths, and stiffness | Chapter 3 |
| Feasible chemical transformations | Chapter 8 (the mechanosynthesis chapter), though the topic is scattered throughout the book |
| Electric field effects | Chapter 11 |
| Contact electrification | Chapter 11 |
| Ionizing radiation damage | Chapter 6 |
| Photochemical damage | Chapter 6 |
| Thermomechanical damage | Chapter 6 |
| Stray reactive molecules | Chapter 6 |
| Device operational reliabilities | Chapters 10 and 11, primarily; “device” here doesn’t mean a macroscale system |
| Device operational lifetimes | Chapters 10 and 11, primarily; “device” here doesn’t mean a macroscale system |
| Energy dissipation mechanisms | Chapter 7 |
| Inaccuracies in molecular mechanics models | Chapter 3 |
| Limited scope of molecular mechanics models | Chapter 3 |
| Limited scale of accurate quantal calculations | Chapter 3 |
| Inaccuracy of semiempirical models | Chapter 3 |
| Providing ample safety margins for modeling errors | Throughout the book |

Drexler seems to be fine with pointing out potential problems, with one exception: he assumes that failures happen fast and are easily detectable rather than being slower and more insidious. That was the one criticism Eliezer Yudkowsky made about Nanosystems (https://www.lesswrong.com/posts/5hX44Kuz5No6E6RS9/total-nano-domination), and it does look like a genuine blind spot. Drexler makes this “single point of failure” assumption because, on the atomic and molecular scales, if something goes wrong, it will cause operational failure quite quickly due to the small distances and high frequencies of atomic vibrations. However, as things scale up, this doesn’t hold, and while Drexler eventually mentions radiation damage as breaking this “single point of failure” assumption, I don’t think he talks about it enough. To deal with this, we could rely on redundancy and on monitoring and testing devices over long periods of time. Drexler already builds a lot of redundancy into his designs, so that’s fine. Adding internal sensors and additional forms of monitoring (internal and external) could be useful, but that feels like a later concern.

Table 1.2 contrasts solution-phase and mechanosynthetic chemistry (Table 8.1 in Chapter 8 is more detailed).

Comparisons:

Conventional fabrication and mechanical engineering

Similarities: Both have components, systems, controlled motion, and rely on traditional ideas of manufacturing rather than synthesis.

Differences: APM will be much smaller and have to deal with molecular phenomena.

Verdict: Agree with Drexler. At a high level, there are many similarities that we can borrow, but at a low level, these intuitions must be abandoned.

Microfabrication and microtechnology

Similarities: Both are on small scales and have quantum effects.

Differences: Drexler claims that technologies like photolithographic pattern definition, etching, deposition, diffusion, etc. are of limited relevance to molecular manufacturing.

Verdict: I disagree with Drexler; I think that microfabrication and microtechnology will be significantly more important than he expects. However, this is because in the 30 years since Nanosystems was released, these technologies have rapidly improved and are nearing the atomic scale, although there are major issues and tradeoffs for precision, throughput, reliability, etc.

Solution-Phase chemistry

Similarities: Both rely on molecular structure, processes, and fabrication.

Differences: Drexler focuses on mechanosynthesis and machine-phase systems.

Verdict: Complete opposites, but some principles of solution-phase chemistry could be useful here.

Biochemistry and molecular biology

Similarities: Both have molecular machines (ribosomes in particular are a great model system and are used frequently in Drexler’s rebuttal arguments) and molecular systems.

Differences: biology uses polymeric materials instead of diamondoid materials and works with stochastic, solution-based chemistry rather than the precise machine-phase chemistry Drexler proposes.

Verdict: Ribosomes are an interesting model system, but I don’t think biology is a good proof of existence for machine-phase chemistry since the materials and environment are radically different.

From Drexler: While full quantum electrodynamic (QED) simulations of nanosystems would be ideal, we must make assumptions for computational tractability because that’s how engineering works.

Scope and assumptions:

A narrow range of structures

Author: Very fair.

No nanoelectronic devices

Author: Personally, I dislike this choice a lot.

Machine-phase chemistry

Author: Fair.

Room temperature processes

Author: I enjoy this choice.

No photochemistry

Author: Good choice; photochemistry can be fickle.

The single-point failure assumption

Author: Controversial, I addressed this previously.

Objectives and non-objectives

Not describing new principles and natural phenomena

Author: Good choice.

Seldom formulating exact physical models

Author: To be fair, computational tractability is an issue.

Seldom describing immediate objectives

Author: I disagree with this choice, particularly considering how much of a deviation from the traditional chemistry paradigm this is.

Not portraying specific future developments

Author: Fair.

Seldom seeking an optimal design in the conventional sense

Author: For this book’s purposes, maximizing efficiency is secondary to derisking additional areas. Good choice.

Seldom specifying complete detail in complex systems

Author: I understand why Drexler made this choice, but I disagree with it and believe it hurts the perception of nanosystems in the scientific community.

Favoring false-negative over false-positive errors in analysis

Author: Good choice.

Describing technological systems of novel kinds and capabilities

Author: Ok.

Chapter 2: Classical Magnitudes and Scaling Laws

Chapter Goal: “When used with caution, classical continuum models of nanoscale systems can be of substantial value in design and analysis.” (DISAGREE!)

Approximation is inescapable. Why? The most accurate physical models (first-principles quantum mechanics) are computationally intractable. This gets discussed a lot in the next chapter.

Basic assumptions for mechanical systems are: constant stress, which implies scale-independent elastic deformation; scale-independent shape; scale-independent speeds; and constancy of space-time shapes describing the trajectories of moving parts.

Author’s Note: I do not agree with this assumption, because size-dependent properties (properties that change in unusual ways with size) are a central idea of modern nanoscience (which is separate from APM).

The choice of diamond as a material is interesting: density (3.5 * 10^3 kg/m^3), Young’s modulus (10^12 N/m^2), and a conservative working stress (10^10 N/m^2).

What do these assumptions mean? “Larger parameter values (for speed, acceleration) relative to those characteristic of more familiar engineering methods.” Things move fast at the nanoscale.

Is that intuition good? Yes. Biology is quite fast at the nanoscale (an analogy we’ll use and then not use in various sections of this review). Look at the website “Cell Biology by the Numbers” (http://book.bionumbers.org/) (a Laura Deming favorite) to learn more about this.

Another big idea of this chapter is that “scaling principles indicate that mechanical components can operate at high frequencies, accelerations, and power densities.”

Is this idea right? Yes, for the same reason as the biology analogy above. However, how high “high frequencies, accelerations, and power densities” are could be up for debate, since 10^6 Hz and 10^9 Hz are both high frequencies but are quite different things in practice.
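
A rough sketch of what these scaling assumptions imply, using only the diamond parameters quoted above. Two modeling choices are mine, not Drexler’s exact formulas: the stress-limited speed uses the thin rotating ring result v = sqrt(stress / density), and the frequency is the fundamental of a free-free bar, c / (2L), with c = sqrt(E / density). Treat the outputs as order-of-magnitude upper bounds; practical operating frequencies would sit well below mechanical resonance.

```python
import math

# Diamond parameters as quoted from Nanosystems above.
DENSITY = 3.5e3          # kg/m^3
YOUNGS_MODULUS = 1e12    # N/m^2
WORKING_STRESS = 1e10    # N/m^2

# Thin rotating ring: hoop stress = density * v^2, so the stress-limited rim speed
# is sqrt(stress / density), independent of size (the "scale-independent speeds" assumption).
v_max = math.sqrt(WORKING_STRESS / DENSITY)

# Longitudinal sound speed sets the scale of mechanical resonances.
c_sound = math.sqrt(YOUNGS_MODULUS / DENSITY)

print(f"stress-limited speed ~ {v_max:.0f} m/s, sound speed ~ {c_sound:.0f} m/s")
for length_m in (1e-3, 1e-6, 1e-9):
    accel = v_max ** 2 / length_m     # centripetal-type acceleration ~ v^2 / L
    freq = c_sound / (2 * length_m)   # fundamental of a free-free bar ~ c / 2L
    print(f"L = {length_m:.0e} m: a ~ {accel:.1e} m/s^2, f ~ {freq:.1e} Hz")
```

Speeds stay fixed while accelerations and frequencies grow as 1/L, which is why a millimeter part resonates in the megahertz range and a nanometer part in the terahertz range under these assumptions.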

Adverse scaling of wear suggests that bearings are a special concern. This is a good point that Drexler brings up, since wear and friction are two areas that commonly get brought up as complaints against nanosystems being possible.

Author’s Note: This is around the point where I begin to strongly disagree with Drexler. For the next few sections he covers classical mechanical, electromagnetic, and thermal systems, and all of the derivations he makes from classical continuum models are correct, or at least have their dimensional analysis done right (I’ll spare you the details). Although Drexler does acknowledge that high-frequency quantum electronic devices will be extremely dissimilar to classical continuum models, I don’t think he conveys how much continuum models break down at the nanoscale. For example, he does mention that our macroscopic understanding of heat breaks down at the atomic level, where heat behaves more like energy transferred ballistically by phonons whose mean free path, in the absence of bounding surfaces, would exceed the dimensions of the structure in question. (This is also a concern when designing UHV chambers, because the mean free path of the handful of gas molecules inside can be many kilometers or more.) But the conclusions he draws from this feel uncertain to me. In particular, I really dislike Table 2.1 in this chapter: one of its main columns rates each physical quantity as “good,” “moderate to poor,” “moderate to inapplicable,” “bad,” “good at small scales,” or “definitional,” and he doesn’t explain what those ratings mean or how he assigned them beyond what appears to be “vibes.”
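
For the UHV aside, the standard hard-sphere kinetic-theory formula gives the gas mean free path as kT / (sqrt(2) * pi * d^2 * p). This sketch assumes N2-like molecules (d of about 3.7 * 10^-10 m) at 295 K; 10^-8 Pa is roughly 10^-10 mbar, a typical UHV base pressure. Phonon mean free paths in solids need entirely different models, so this only illustrates the gas-phase point.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def mean_free_path(pressure_pa: float, temperature_k: float = 295.0,
                   molecular_diameter_m: float = 3.7e-10) -> float:
    """Hard-sphere kinetic-theory mean free path: kT / (sqrt(2) * pi * d^2 * p), in meters."""
    return K_B * temperature_k / (
        math.sqrt(2) * math.pi * molecular_diameter_m ** 2 * pressure_pa)


# Atmospheric pressure, rough vacuum, and UHV (assumed N2-like gas).
for p in (1e5, 1e-1, 1e-8):
    print(f"p = {p:.0e} Pa -> mean free path ~ {mean_free_path(p):.1e} m")
```

At atmospheric pressure this gives tens of nanometers; at UHV it gives hundreds of kilometers, far larger than any chamber.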

Further chapters consider more limits and edge cases. In particular, AC electrical circuits are different from these models. (They would be different because the failure modes for complex electromagnetic systems can be astounding: short circuiting, overloads, arc faults, insulation breakdown (which will be an issue when in Chapter 11 we discuss motor designs), harmonic distortion, voltage spikes or surges, etc.)

Author’s Note: This is good, but I would almost say to scrap any continuum model used at the nanoscale, even if it dramatically increases the computational cost of our models.

Conclusions: Scaling laws are bad for electromagnetic systems with small calculated time constants, reasonably good for thermal systems and slow-varying electromagnetic systems, and often excellent for mechanical systems as long as the component dimensions substantially exceed atomic dimensions. Scaling principles indicate that mechanical components can operate at high frequencies, accelerations, and power densities. The adverse scaling of wear lifetimes suggests that bearings are of special concern.

Chapter 3: Potential Energy Surfaces

Chapter Goal: This chapter talks about potential energy surfaces, which are fundamental to practical models of molecular structures and dynamics.

Author’s Note: This chapter (and Chapters 4–7, which cover various molecular dynamics methods and their implications) has made AI-doom arguments that rely on an AI system able to “magically solve these problems computationally” seem significantly weaker to me. It’s not that I don’t think AI models for molecular systems could get significantly better (they could, and they will), but given how many parts a macroscale nanodevice is made of, developing one will require serious experimentation in the loop and feedback testing. In the George Hotz vs. Eliezer Yudkowsky YouTube debate (https://www.youtube.com/watch?v=6yQEA18C-XI), Eliezer takes the position that bootstrapping APM is somewhat like a special case of the protein folding problem, and makes an interesting point that it could maybe rely on the past corpus of experiments. However, because of how many approximations our calculations require, developing APM demands a high level of empiricism, which Hotz seems to correctly understand. Computations are for invalidation rather than validation, and drug/material screening looks a lot like this: “a weak computation screens out 99%, the remaining 1% goes through a more rigorous computation that screens out 99% of that 1%, and then experiments are run on the remaining 0.01% to find promising compounds.”
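
The screening funnel described above is simple multiplication, sketched here in Python. The starting library size (10^6 candidates) is hypothetical; the 1% pass rates come from the description.

```python
def screening_funnel(n_candidates: int, pass_fractions: list[float]) -> list[int]:
    """Number of candidates surviving each successive screening stage."""
    survivors = [n_candidates]
    for fraction in pass_fractions:
        survivors.append(round(survivors[-1] * fraction))
    return survivors


# Hypothetical library of one million designs; two computational stages each keep 1%.
stages = screening_funnel(1_000_000, [0.01, 0.01])
print(stages)
```

The last entry (100 candidates, 0.01% of the original) is what would go to experiment.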

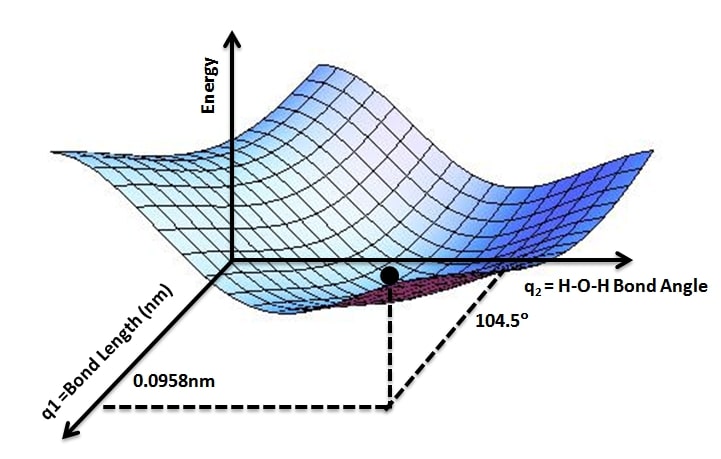

DEFINITION: Molecular potential energy surfaces, which “...stem from a visualization in which a potential energy function in N dimensions (that is, in configuration space) is described as a surface in N + 1 dimensions, with energy corresponding to height. (When N>2, the visualization is necessarily nonvisual.)”

Visualization: significant features of a PES are its potential wells and the passes (cols) between them. “A stiff, stable structure resides in a well with steep walls and no low, accessible cols leading to alternative wells. A point representing the initial state of a chemically reactive structure, in contrast, resides in a well linked to another well by a col of accessible height. A point representing a mobile nanomechanical component commonly moves in a well with a long, level floor.”

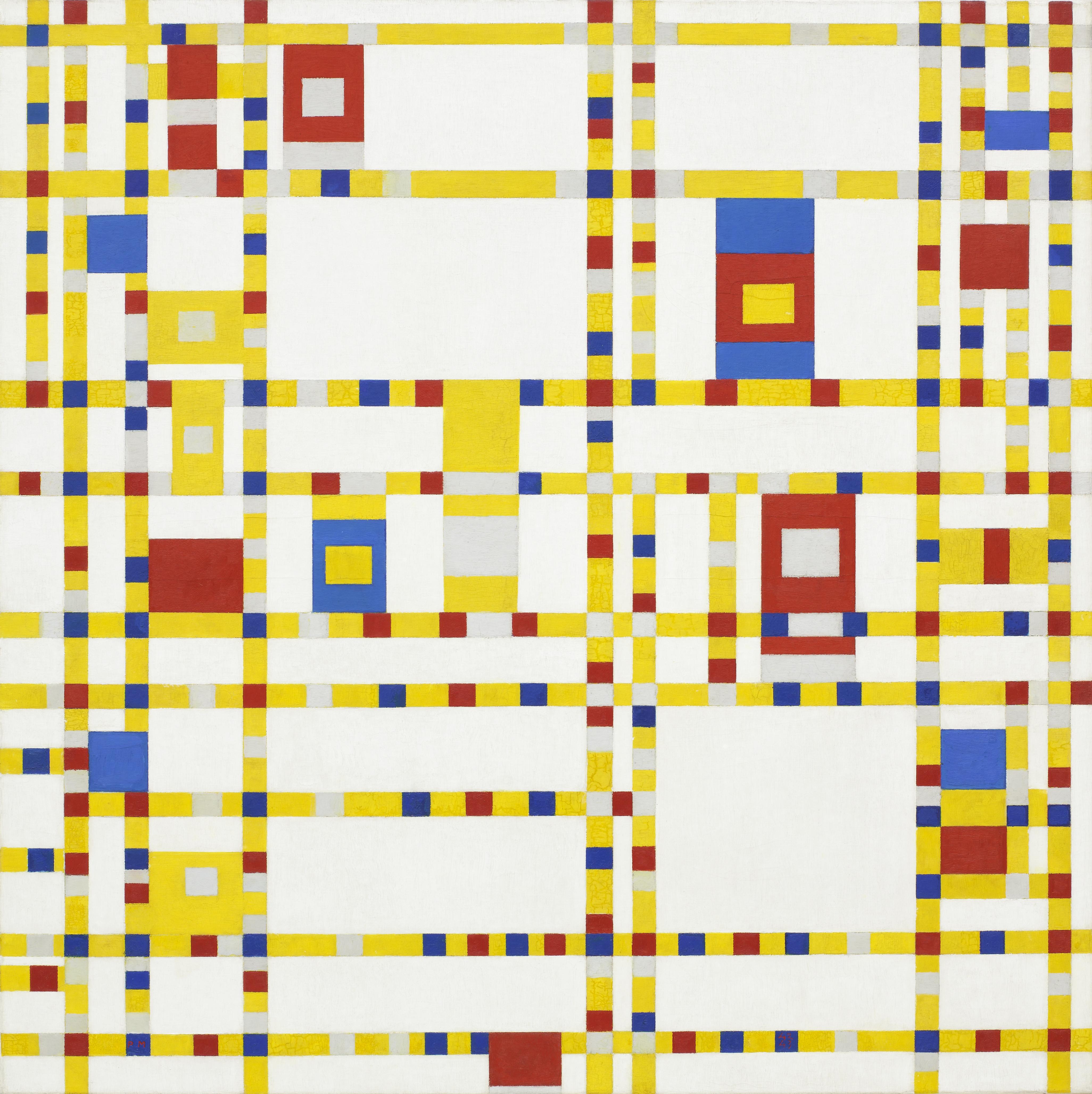

Potential Energy Surface for Water

HUGELY UNDERRATED POINT: Drexler makes an analogy saying that “molecular systems in which all transitions occur between distinct potential wells resemble transistor systems in which all transitions occur between distinct logic states: in both instances, the application of correct design principles can yield reliable behavior even though other systems subject to the same physical principles behave erratically.”

I believe this analogy is a mostly valid one to make, but it is quite load-bearing: if we weren’t able to rely on these distinct transitions that were analogous to logic states, then all of this would fall apart.

BIG CONCEPT: time vs. accuracy trade-off for simulations. You can have fast simulations or accurate simulations, but not fast, accurate simulations.

Drexler doesn’t directly use wave equations or the mathematics of quantum mechanics here; that’s too low a level for the work we want to do. The goal is to think more like a chemist or materials scientist than a particle physicist.

Quantum electrodynamics (QED) is a very accurate theory of electromagnetic fields and electrons that would be lovely to use if it weren’t so intractable for relevant systems.

Schrödinger’s equation is also intractable for relevant systems.

Why? Imagine you want to model a 1-D differential equation. If you put down 10 points to approximate it, your approximation will be pretty good. If you want to model a single atom in 3D space, you’ll need 10 points along each of the Cartesian X, Y, and Z axes, so that’s 10^3 points to consider. Because the wave function depends on all of the particles’ coordinates jointly, the grids multiply rather than add: to model 300 atoms, you’ll need 10^(3 dimensions * 300 atoms) = 10^900 points, which is far too many for any computer we have.
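
The counting argument above can be checked directly. This sketch assumes a plain product grid with 10 points per axis (no symmetry, sparse grids, or basis-set tricks, which real codes use precisely to avoid this blowup) and uses Python’s arbitrary-precision integers so the 10^900 figure is computed exactly.

```python
def grid_size(n_particles: int, points_per_axis: int = 10, dims: int = 3) -> int:
    """Grid points needed to tabulate a wave function on a plain product grid.

    The wave function depends jointly on all dims * n_particles coordinates, so the
    grid is the Cartesian product of one axis grid per coordinate.
    """
    return points_per_axis ** (dims * n_particles)


for n in (1, 2, 10, 300):
    size = grid_size(n)
    # With 10 points per axis the size is an exact power of ten: digits - 1 = exponent.
    print(f"{n:>3} particles: 10^{len(str(size)) - 1} grid points")
```

For comparison, the observable universe is commonly estimated to contain around 10^80 atoms.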

Relativistic effects should be considered, but for valence electrons (especially in lighter elements), they’re chemically negligible. Mostly: in Section 8.4.4b, Drexler discusses an exception, where relativistic effects in heavy atoms can cause strong spin-orbit coupling.

Relevant: For chemistry and nanomechanical engineering, the full wave function gives more information than is necessary.

Born-Oppenheimer Approximation: Treats the motion of electrons and nuclei separately. A proton is about 1,836 times more massive than an electron (and a carbon nucleus about 22,000 times). Since electrons and nuclei feel electrostatic forces of comparable size, the far heavier nuclei respond much more slowly. Thus, we can treat the nuclei as fixed while solving for the electrons.
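
A quick numerical check of the mass ratios, using CODATA 2018 constants. The carbon-12 nuclear mass subtracts six electron masses from 12 u and ignores electronic binding energy, which is negligible at this precision. The speed ratio assumes equal kinetic energy, one simple way to see why nuclei lag behind electrons; it is a heuristic, not the formal Born-Oppenheimer expansion.

```python
import math

# CODATA 2018 values, in kg.
ELECTRON_MASS = 9.1093837015e-31
PROTON_MASS = 1.67262192369e-27
ATOMIC_MASS_UNIT = 1.66053906660e-27


def carbon12_nucleus_mass() -> float:
    """12 u minus six electron masses (electronic binding energy neglected)."""
    return 12 * ATOMIC_MASS_UNIT - 6 * ELECTRON_MASS


for name, m_nuc in (("proton", PROTON_MASS), ("carbon-12 nucleus", carbon12_nucleus_mass())):
    mass_ratio = m_nuc / ELECTRON_MASS
    # At equal kinetic energy (m v^2 / 2), speed scales as 1 / sqrt(mass).
    speed_ratio = math.sqrt(mass_ratio)
    print(f"{name}: {mass_ratio:.0f}x the electron mass; "
          f"an electron moves ~{speed_ratio:.0f}x faster at equal kinetic energy")
```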

Where does the Born-Oppenheimer approximation break down? When nuclear motions are fast (as in high-energy collisions), when excited states occur at very low energies (rare in stable molecules), and when small changes in nuclear coordinates cause large changes in the electron wave function (also rare).

Potentially controversial statement: “In most nanomechanical systems, as in most chemical reactions, electron wave functions change smoothly with changes in molecular geometry.”

Verdict: Not very controversial, but be careful for (literal) edge cases.

Practical calculations require further approximations.

The most popular approaches are known as molecular orbital methods.

Independent-electron approximation: Treats each electron in a molecule as moving in an averaged field created by the other electrons, simplifying calculations.

What’s the catch? This approximation neglects electron correlation, where the movement of one electron is influenced by the instantaneous position of others, potentially leading to significant errors in the description of electron behavior, especially in systems where electron-electron interactions are strong.

Approximate wave functions: Approximate wave functions are used to solve the Schrödinger equation when exact solutions are not possible, using methods like Hartree-Fock to estimate electron distributions.

What’s the catch? These approximations can fail to account for all electron correlations and might not accurately represent the true nature of the electron density, especially in excited states or systems with close-lying energy levels.

Configuration Interaction (CI): improves upon the Hartree-Fock approximation by considering a superposition of multiple electron configurations, accounting for electron correlation more accurately.

What’s the catch? CI can become computationally very demanding with increasing system size because the number of possible electron configurations grows exponentially, often making it impractical for large systems.
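
To see how fast full CI grows, this sketch counts Slater determinants for a closed-shell system: choose which of the n spatial orbitals hold the alpha electrons and, independently, which hold the beta electrons. It ignores spin and point-group symmetry, which trim the count but don’t change the combinatorial growth.

```python
from math import comb


def full_ci_determinants(n_spatial_orbitals: int, n_electrons: int) -> int:
    """Slater determinants in a full CI expansion for a closed-shell system:
    C(n_orb, n_alpha) * C(n_orb, n_beta) with n_alpha = n_beta = n_electrons / 2."""
    if n_electrons % 2:
        raise ValueError("this sketch assumes an even, closed-shell electron count")
    n_alpha = n_beta = n_electrons // 2
    return comb(n_spatial_orbitals, n_alpha) * comb(n_spatial_orbitals, n_beta)


for n in (4, 10, 20, 30):
    print(f"{n} electrons in {n} orbitals: {full_ci_determinants(n, n):,} determinants")
```

Going from 10 to 30 electrons (in as many orbitals) takes the count from tens of thousands to roughly 10^16.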

Semiempirical methods (as opposed to ab initio methods): use approximations and empirical parameters to simplify calculations, trading some accuracy for computational efficiency.

What’s the catch? The empirical parameters may not transfer well between different systems or states, and the approximations can limit the method's predictive power, making these methods less reliable for systems outside the range for which the parameters were fitted.

However, due to concerns about the expense, accuracy, and scalability of these methods, we can also look into molecular mechanics methods, which use molecular geometry instead of electronic structures for energy calculations.

Fun fact: organic chemists represent molecular structures with ball-and-stick models: each ball is an atom, and each stick is a bond.

For small molecules, molecular mechanics is cheaper than ab initio methods by a factor of about 10^3. For larger systems, the gap can be even larger, because the cost of molecular mechanics methods scales with the second or third power of the number of atoms (O(N^2) or O(N^3)), while ab initio methods scale with the fourth power or higher (O(N^4) and up).
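
A toy model of how the gap widens with system size. It assumes pure power laws with the exponents given above (N^2 for molecular mechanics, N^4 for ab initio) and anchors the 10^3 gap at a hypothetical 20-atom molecule; real codes have cutoffs, prefactors, and crossovers that this ignores.

```python
def cost_gap(n_atoms: int, n_ref: int = 20, gap_at_ref: float = 1e3,
             mm_exponent: float = 2.0, ab_initio_exponent: float = 4.0) -> float:
    """Ratio of ab initio cost to molecular-mechanics cost under pure power-law
    scaling, anchored so the ratio equals gap_at_ref at n_ref atoms (hypothetical)."""
    return gap_at_ref * (n_atoms / n_ref) ** (ab_initio_exponent - mm_exponent)


for n in (20, 200, 2_000, 20_000):
    print(f"{n:>6} atoms: ab initio ~{cost_gap(n):.0e}x the cost of molecular mechanics")
```

Under these assumptions every tenfold increase in system size widens the gap a hundredfold.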

A limitation of molecular mechanics is that it works best when the systems are not too far from equilibrium. I don’t think it will be much of an issue for, say, a solid block of atoms (that’s essentially just in one energetic state), but for modeling motors or moving objects, then molecular mechanics would likely not be a good choice.

Thus, computations that aren’t essentially QED should be used more for invalidating certain designs than validating them. A simulation could tell you, “This won’t work," but only an experiment will tell you, “This will.”

The MM2 model: Nanosystems relies on the MM2 force field; newer options include MM4 and force fields learned by machine-learning models trained on quantum-chemical DFT data. (Below, I compare MM2 and MM4. Also, a note from Mark Friedenbach: “Re MM2 vs MM4 -- almost nobody uses either of these codes today. A derivation of the MM codes is used by Merck in their pharmaceutical research. But largely there is a slew of force fields for special use cases. The most widely used hydrocarbon force field is probably ReaxFF.”)

Bond stretching:

MM2 models bond stretching as a nearly harmonic oscillator: a quadratic term in the deviation from the equilibrium bond length, plus a small cubic correction.

MM4 adds further anharmonic terms to better represent the potential energy associated with bond stretching, especially at larger deviations from the equilibrium bond length.

Bond angle bending

MM2 employs a mostly quadratic function for angle bending (with a small sextic correction), treating deviations from the equilibrium bond angle as nearly harmonic motion.

MM4 incorporates more sophisticated functions, including anharmonic terms, to account for the energy changes associated with bond angle bending more accurately, especially for large deviations.

Bond Torsion

MM2: Models torsional interactions using a Fourier series or cosine functions to represent the energy variations with rotational angles of bond torsions.

MM4 enhances the description of torsional potentials, adding terms to better capture the complexities of molecular conformational changes and stereoelectronic effects.
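
The three-term Fourier (cosine) series used for MM2-style torsions can be written in a few lines. This sketch uses the V1/V2/V3 sign convention common in the Allinger force fields, with a single effective threefold term sized to ethane’s roughly 2.9 kcal/mol rotational barrier; that value and the one-term simplification are assumptions for illustration.

```python
import math


def torsion_energy(omega_deg: float, v1: float = 0.0, v2: float = 0.0, v3: float = 0.0) -> float:
    """Three-term Fourier torsion potential (same energy units as V1..V3):
    E = V1/2 (1 + cos w) + V2/2 (1 - cos 2w) + V3/2 (1 + cos 3w)."""
    w = math.radians(omega_deg)
    return (v1 / 2 * (1 + math.cos(w))
            + v2 / 2 * (1 - math.cos(2 * w))
            + v3 / 2 * (1 + math.cos(3 * w)))


# Ethane-like threefold rotor: eclipsed (0, 120 deg) is the maximum, staggered (60, 180 deg) the minimum.
for omega in (0, 30, 60, 120, 180):
    print(f"{omega:>3} deg: {torsion_energy(omega, v3=2.9):.2f} kcal/mol")
```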

Electrostatic Interactions

MM2 calculates electrostatic interactions using Coulomb’s law, often with fixed partial charges on atoms.

MM4: May include more advanced treatments of electrostatics, considering charge transfer, polarization, and possibly an improved charge distribution model.

Nonbonded Interactions

MM2: Accounts for van der Waals forces with a Buckingham-type exponential-6 potential, a close relative of the Lennard-Jones potential.

MM4 includes explicit treatment of hydrogen bonding and improved van der Waals parameters.
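
The Lennard-Jones form named above, as a minimal sketch in reduced units (epsilon = sigma = 1, hypothetical). MM-family force fields typically use a related exponential-6 (Buckingham-type) form instead, so this shows the general shape of a nonbonded potential rather than MM2’s exact term: strong repulsion inside sigma, a minimum of depth epsilon at 2^(1/6) * sigma, and weak attraction beyond.

```python
def lennard_jones(r: float, epsilon: float, sigma: float) -> float:
    """12-6 Lennard-Jones pair energy: 4 * eps * ((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


EPSILON, SIGMA = 1.0, 1.0        # reduced units (hypothetical)
R_MIN = 2 ** (1 / 6) * SIGMA     # location of the energy minimum

for r in (0.95, 1.0, R_MIN, 1.5, 2.5):
    print(f"r = {r:.3f} sigma: E = {lennard_jones(r * SIGMA, EPSILON, SIGMA):+.3f} epsilon")
```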

Complications and conjugated systems

MM2: It doesn’t really capture some of the unusual subtleties of conjugated and aromatic systems or the effects of electron delocalization.

MM4: Improved handling of conjugated systems, electron delocalization, and aromaticity, providing better energy and geometry predictions for complex organic molecules.

So, overall, does the MM2 to MM4 switch change anything? It's hard to say, but I don’t think so. These simulations are still tools for disproving designs rather than proving designs. We need to run more experiments!

Drexler also mentions additional terms that could be added to bonds under large tensile loads and nonbonded interactions under large compressive loads, but I believe that these are covered better in MM4, and ultimately, I am still skeptical of any computational model for such complex systems.

(New Section) Potentials for chemical reactions

Relationship to other methods: Molecular mechanics methods are based on the idea of structures with well-defined bonds; they cannot describe transformations that make or break bonds and cannot predict chemical instabilities. You could use CI methods to try and model bond cleavage and formation, but many papers also just rely on approximate potential surfaces.

Bond cleavage and radical coupling: There’s not much interesting content here, just the basics. A simple reaction is the cleavage of the bond in a two-atom system (or a dimer), splitting it into two radicals; conversely, for two radicals to combine, their unpaired electrons must have antiparallel (paired) spins to form a bond. This doesn’t affect Drexler’s work very much; to be honest, I’m not sure why he included it.

Abstraction reactions: Abstraction reactions are ones that make and break bonds simultaneously, such as the symmetrical hydrogen abstraction reaction: H3C + HCH3 -> H3CH + CH3. This doesn’t immediately relate to Drexler’s designs either, but I want to include as much information as I can.

Continuum representation of surfaces: Van der Waals attractions between nanometer-scale objects can be substantial. (True!) Drexler models them using the Hamaker constant, which, honestly, is an approach typically used for continuum surfaces.
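
How substantial? For two flat half-spaces, nonretarded Hamaker theory gives an interaction energy per area W = -A / (12 * pi * D^2) and an attractive pressure A / (6 * pi * D^3). This sketch assumes A = 3 * 10^-19 J as an order-of-magnitude Hamaker constant for diamond; it is a continuum estimate, so at sub-nanometer gaps it carries exactly the caveats discussed in this section.

```python
import math


def vdw_plate_energy_per_area(hamaker_j: float, gap_m: float) -> float:
    """Interaction energy per unit area (J/m^2) of two half-spaces: -A / (12 pi D^2)."""
    return -hamaker_j / (12 * math.pi * gap_m ** 2)


def vdw_plate_pressure(hamaker_j: float, gap_m: float) -> float:
    """Magnitude of the attractive pressure (Pa): dW/dD = A / (6 pi D^3)."""
    return hamaker_j / (6 * math.pi * gap_m ** 3)


A_DIAMOND = 3e-19  # J; assumed order-of-magnitude Hamaker constant for diamond
for gap_nm in (0.3, 1.0, 3.0):
    d = gap_nm * 1e-9
    print(f"D = {gap_nm} nm: W ~ {vdw_plate_energy_per_area(A_DIAMOND, d):.1e} J/m^2, "
          f"P ~ {vdw_plate_pressure(A_DIAMOND, d):.1e} Pa")
```

At a 0.3 nm gap this continuum estimate gives a pressure of several hundred megapascals, which is why van der Waals forces cannot be ignored in nanomechanical design.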

Transverse-continuum models of surfaces: For non-metallic surfaces (which would undergo bonding rather than repulsion), we need different models to determine how two sliding, unreactive, atomically precise surfaces interact. Drexler claims that if we neglect the elastic deformation caused by interfacial forces, the resulting surface-surface potential looks roughly like “two planes rubbing against each other.” I think this is incorrect: atomic-scale surfaces, though smooth enough, are far too small for us to assume that elastic deformation can be neglected. This implies we’ll need more computational power, obviously, but which designs become unfeasible is uncertain. 3D bulk mechanosynthesis and motors should be relatively untouched, but rod logic computing and other designs that rely on surfaces sliding past each other should be examined more carefully. There is the phenomenon of superlubricity (very low friction at the atomic scale). Don’t think of superconductivity or superfluidity: the friction still exists, it’s just very small (a kinetic coefficient of friction below about 0.01). It was theorized in Nanosystems and shown to exist experimentally in 2004. That phenomenon could prove Drexler’s designs right, but I suspect that only experiments will show how things really play out.

Molecular models and bounded continuum models: For irregular surfaces, you really should be modeling at the level of interatomic potentials, but for bulk surfaces, Drexler suggests that one could try using bulk continuum models. This is developed more in Chapter 9, but I’m skeptical, because these sorts of systems are so far from anything that exists even in nature (they’re much better than ribosomes, so don’t make that analogy) that I would just try to model all of the interatomic potentials if possible.

Conclusions: The potential energy surface of a ground-state system can determine its mechanical properties, but (author’s note) I think a PES obviously isn’t enough to map everything out. For starters, the approach assumes that everything is stable and that all transition states have been mapped out, it makes many approximations, and many different surfaces may be needed for non-adiabatic events. Despite that, it’s a good starting point. Drexler does address some of these concerns later on (mostly the transition states and the stability assumption), but I still have mixed feelings about using potential energy surfaces.

Further reading: Drexler suggests additional resources for reading up on molecular quantum mechanics and potential energy surfaces, but honestly, I don’t think that will solve the problems inherent with trying to use computers to model these nanomechanical systems: in the end, you have to run the experiments. Working nanotechnology is still very much an empirical rather than a purely computational or theoretical discipline.

Volume 2: Back From The Desert

Chapter 4: Molecular Dynamics

Chapter Goal: This chapter is just an overview of Molecular Dynamics, which will be fundamental for chapters 5 through 8.

The chapter starts by discussing methods that don’t rely on statistical mechanics.

Infrared spectroscopy studies molecular vibrations. Small displacements of stiff structures produce nearly linear restoring forces.

Controversial point: “This permits the use of a harmonic approximation, in which the vibrational dynamics can be separated into a set of independent normal modes and the total motion of the system can be treated as a linear superposition of normal-mode displacements. This common approximation is of considerable use in describing nanomechanical systems.”

Does this make sense to use? Yes, actually: it’s commonly used in vibrational spectroscopy, and while it is an approximation, it does make sense to use here.

Additionally, since both classical and quantum mechanics permit exact solutions for the harmonic oscillator, the time evolution of systems can readily be calculated in the harmonic approximation.
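
A minimal sketch of the harmonic (normal-mode) approximation using NumPy: build the Hessian for a 1-D chain of masses joined by springs, mass-weight it, and diagonalize. Each eigenvector is an independent normal mode and each eigenvalue a squared angular frequency. The CO2-like masses and unit spring constant are illustrative; the expected results are a zero-frequency translation, sqrt(k / m_O), and sqrt(k / m_O * (1 + 2 m_O / m_C)).

```python
import numpy as np


def normal_mode_frequencies(masses, springs):
    """Angular frequencies of a 1-D chain of masses joined by harmonic springs.

    Potential: V = sum_i k_i / 2 * (x_{i+1} - x_i)^2. Its Hessian K is mass-weighted
    (M^-1/2 K M^-1/2) and diagonalized; eigenvalues are omega^2, eigenvectors the modes.
    """
    n = len(masses)
    hessian = np.zeros((n, n))
    for i, k in enumerate(springs):
        hessian[i, i] += k
        hessian[i + 1, i + 1] += k
        hessian[i, i + 1] -= k
        hessian[i + 1, i] -= k
    inv_sqrt_m = 1.0 / np.sqrt(np.asarray(masses, dtype=float))
    mass_weighted = hessian * np.outer(inv_sqrt_m, inv_sqrt_m)
    eigenvalues = np.linalg.eigh(mass_weighted)[0]  # ascending order
    # Clip tiny negative round-off on the zero-frequency (rigid translation) mode.
    return np.sqrt(np.clip(eigenvalues, 0.0, None))


# Linear O-C-O toy model with equal springs (illustrative units).
m_o, m_c, k = 16.0, 12.0, 1.0
print(normal_mode_frequencies([m_o, m_c, m_o], [k, k]))
```

Because the modes are independent, the full motion is just a sum of sinusoids at these frequencies, which is what makes the harmonic approximation so convenient.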

Drexler then discusses molecular beam experiments, which I don’t think are that important to cover (he doesn’t mention them as much), but he does say something quite interesting: “In these respects, reactions in nanomechanical systems resemble the solution-phase reactions familiar to chemists. In such systems, the chief concern is with overall reaction rates, not with the details of trajectories. As a consequence, the detailed shape of the PES…is of reduced importance.”

My thoughts are that this makes sense, and should be fine.

Drexler then talks about generalized trajectories. I think this section isn’t super relevant in either a positive or a negative way, but I disagree that molecular mechanics methods are suitable for describing the short-term dynamics of nanomechanical components. Although he does acknowledge that you’ll need quantum corrections (Section 4.2.3b of Nanosystems) and that motions driven by non-thermal energy sources are often negligible (correct), I don’t think these models would be accurate without experiments to inform them.

Statistical Mechanics: What I find interesting, and very nuanced from Drexler, is that there is a level of inherent randomness in the nanoscale world, but that “the nanomechanical engineer’s task, then, is to devise systems in which all probable behaviors (or all but exceedingly improbable behaviors) are compatible with successful systems operation. This typically requires designs that present low energy barriers to desired behaviors, and high energy barriers to undesired behaviors, in which the high energy barriers often are a consequence of the use of stiff components.”
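To see why “low energy barriers to desired behaviors, and high energy barriers to undesired behaviors” is such a powerful design rule, here is a rough sketch that estimates barrier-crossing rates with the transition-state-theory prefactor kT/h. That prefactor is an assumption (real nanomechanical attempt frequencies can differ by orders of magnitude), and both barrier heights are hypothetical; the point is the exponential dependence on barrier height.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
H = 6.62607015e-34      # Planck constant, J*s
EV = 1.602176634e-19    # joules per electronvolt


def crossing_rate(barrier_eV, T=300.0):
    """Rough transition-state-theory rate (1/s): (kT/h) * exp(-E/kT)."""
    kT = K_B * T
    return (kT / H) * math.exp(-barrier_eV * EV / kT)


# Hypothetical barriers: one for a desired motion, one for an undesired motion.
desired = crossing_rate(0.1)    # ~1e11 crossings per second
undesired = crossing_rate(1.5)  # ~4e-13 per second: tens of thousands of years between crossings
```

A 1.4 eV difference in barrier height separates “happens constantly” from “essentially never happens” by more than twenty orders of magnitude, which is why stiff components with high barriers matter so much.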

Section 4.3.2a) essentially restates the fundamentals of statistical mechanics, with standard textbook definitions. Nothing unusual here.

However, Section 4.3.2b) contains an interesting idea: “in nanomechanical design, a common concern is the probability that a thermally excited system will be found in a particular configuration at a particular time.”

Chapter 5 expands on this: it computes the positional PDFs (probability density functions) of various elementary nanomechanical systems under both quantum and classical models, which shows where classical statistical mechanics can be applied to nanomechanical systems and where it breaks down. I think this is a very nuanced point from Drexler about where this tool (classical statistical mechanics) can and cannot be applied.
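As a minimal classical illustration of the “probability of being found in a particular configuration” question, the sketch below treats one stiff degree of freedom as a harmonic oscillator in thermal equilibrium. Its positional PDF is then a Gaussian with variance kT/k_s, so the probability of a thermal excursion larger than a tolerance d is a complementary error function. The stiffness values and the 0.1 nm tolerance are hypothetical, and the quantum corrections that Chapter 5 also treats are ignored here.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def classical_variance(stiffness_N_per_m, T=300.0):
    """Classical thermal positional variance of a harmonic oscillator: kT / k_s (m^2)."""
    return K_B * T / stiffness_N_per_m


def excursion_probability(tolerance_m, stiffness_N_per_m, T=300.0):
    """Probability that |x| exceeds the tolerance for a Gaussian PDF with variance kT/k_s."""
    sigma = math.sqrt(classical_variance(stiffness_N_per_m, T))
    return math.erfc(tolerance_m / (sigma * math.sqrt(2.0)))


# Hypothetical 0.1 nm tolerance at 300 K for a soft and a stiffer component.
p_soft = excursion_probability(0.1e-9, 1.0)    # roughly 0.1
p_stiff = excursion_probability(0.1e-9, 10.0)  # on the order of 1e-6
```

A tenfold increase in stiffness turns a frequent excursion into a rare one, because the tolerance moves further out into the Gaussian tail.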

Drexler then talks about how configuration space could be a useful visualization for understanding potential energy surfaces, but honestly that doesn’t address any physical question about whether the nanomachines will work. Where this tangent gets interesting is in Section 4.3.3b), where Drexler states that “Minima separated by [potential energy surface] barriers small compared to kT can often be regarded as a single minimum, because the barriers are crossed so easily.” First, I’m skeptical of this claim, but second, let’s do the math. kT is kB, the Boltzmann constant, multiplied by T, the absolute temperature in kelvin (K). It’s used as the energy scale for molecular-scale systems, since it is the amount of heat that increases the thermodynamic entropy of a system by kB. At room temperature (about 298 K), kT is approximately 4.11 * 10^-21 J. For barriers well below kT, Drexler’s assumption is fair, although I dislike the word “small” because it should be much more rigorous here. Drexler’s heavy use of potential energy surfaces is already somewhat controversial among synthetic organic chemists I have talked to, and any hand-waving will only agitate them more. To start putting quantitative numbers on this, I would say that if a barrier is at least one order of magnitude (OOM) smaller than kT, then treating the adjacent minima as a single minimum should be fine.
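The kT arithmetic and the one-OOM rule of thumb can be sketched as follows. The factor of 10 is my proposal, not a threshold from Nanosystems; the unit conversions just make kT easier to compare with barrier heights quoted in eV or kJ/mol.

```python
K_B = 1.380649e-23    # Boltzmann constant, J/K (exact in SI)
EV = 1.602176634e-19  # joules per electronvolt
N_A = 6.02214076e23   # Avogadro constant, 1/mol


def thermal_energy(T):
    """kT in joules at absolute temperature T (kelvin)."""
    return K_B * T


def treat_as_single_minimum(barrier_J, T, factor=10.0):
    """Proposed rule of thumb: merge minima if the barrier is <= kT / factor."""
    return barrier_J <= thermal_energy(T) / factor


kT = thermal_energy(298.0)      # about 4.11e-21 J
kT_eV = kT / EV                 # about 0.0257 eV
kT_kJ_per_mol = kT * N_A / 1e3  # about 2.48 kJ/mol
```

At a barrier of kT/10, the Boltzmann factor at the barrier top is exp(-0.1), about 0.9, so the barrier region is nearly as populated as the minima themselves, which is why merging them seems safe.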

Section 4.3.4: Equilibrium vs Nonequilibrium Processes: Honestly, this was mostly textbook definitions, and the gist is that “when the deviations are small, equilibrium statistical mechanics works as a physical model,” which I agree with. Whether the deviations are small depends on whether it’s a hundred-atom nanosystem or a much larger system; larger nanosystems would require a large amount of power dissipation to deviate significantly from equilibrium, which gets covered more in Chapter 6 or 7, I believe. Overall, this feels fine. I don’t think the modeling here is going to be a limiting factor; I would be more worried about frictional modes.

Section 4.3.5: Entropy and Information: There wasn’t much unusual content here, except that Drexler mentioned thermodynamically reversible computing (that is, the dissipation of free energy per logically reversible operation can be made arbitrarily close to zero). Michael P. Frank of Sandia National Labs is an expert on this type of computing and would be a good person to discuss it with. Reversible computing is an interesting paradigm and application of nanomechanical computing because it would allow for many OOM more computation per joule than we can achieve today, although it would take longer to reach those extremely high efficiencies. Reversible computing is out of scope for this review, but you can learn more here.

Section 4.4.3c): Drexler mentions that “mechanosynthesis is relatively insensitive to errors.” I found this controversial at first, but less so after thinking about it, because at very small scales you can quickly reverse a mistake that’s one atom in size. Still, I would be cautious about saying this before experiments have been run. Drexler also mentioned that you could force a reaction to happen (which is possible; high pressures, for example, have been used to synthesize superconducting hydrides), and in Section 4.4.3d) he also said that nanomechanical designs could eventually be tested and corrected. I think that last point is very nuanced and good on Drexler’s part, because I strongly suspect most of his work needs to be corrected.

Conclusion: There’s nothing unusual here, but Drexler made one interesting statement: “A consideration of PES accuracy requirements in light of dynamical principles and nanomechanical concerns shows that the mechanical stiffness of diamondoid structures and mechanochemical devices often greatly reduces their sensitivity to PES modeling errors, relative to more familiar molecular systems.”

Does this make sense? Yes, I think it does. High mechanical stiffness gives more nearly deterministic behavior from a molecular system (there will always be some level of positional uncertainty, as the next chapter covers), and diamondoid is an unusually stiff material, so this is not an unusual proposition.
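One simple way to see why stiffness reduces sensitivity to PES errors: if a modeling error adds a roughly constant spurious force δF near a harmonic minimum of stiffness k_s, the minimum moves by δF/k_s. This sketch is my own illustration, not Drexler’s derivation, and the 10 pN error and the stiffness values are hypothetical.

```python
def equilibrium_shift(force_error_N, stiffness_N_per_m):
    """Shift of a harmonic minimum (m) under a constant spurious force: dF / k_s."""
    return force_error_N / stiffness_N_per_m


force_error = 1e-11  # hypothetical 10 pN modeling error
for ks in (0.1, 1.0, 10.0, 100.0):  # hypothetical stiffnesses, N/m
    shift_nm = equilibrium_shift(force_error, ks) * 1e9
    print(f"k_s = {ks:6.1f} N/m -> shift = {shift_nm:.4f} nm")
```

Each tenfold increase in stiffness makes the structure ten times less sensitive to the same force error, which is the qualitative content of Drexler’s claim.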

Further Reading: Molecular Dynamics is a broad field. Drexler recommends some other books, mainly on MD of biomolecules, but this isn’t super relevant.

Chapter 5: Positional Uncertainty

Chapter Goal: This chapter covers positional uncertainty in nanomechanical design, a fundamental problem because nanomechanical components are subject to both quantum and thermal positional uncertainty. At room temperature, however, thermal motion is almost always the more important of the two. (This is an ongoing theme: in other chapters we’ll discuss how frictional effects are much more serious than electron tunneling and similar quantum effects.)

Section 5.3: Drexler states that “many parts of nanomechanical systems are adequately approximated by linear models, in which restoring forces are proportional to displacements. The prototype of such systems is the harmonic oscillator, consisting of a single mass with a single degree of freedom subject to a linear restoring force.”
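To put a number on how “classical” a harmonic oscillator is, here is a sketch comparing the classical variance kT/k_s with the standard thermal-equilibrium quantum result. The carbon-atom mass and the 10 N/m stiffness are hypothetical illustrative values, not numbers taken from the book.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
AMU = 1.66053906660e-27  # atomic mass unit, kg


def classical_variance(ks, T):
    """Classical positional variance of a harmonic oscillator: kT / k_s."""
    return K_B * T / ks


def quantum_variance(ks, mass, T):
    """Thermal-equilibrium quantum variance: (hbar / (2 m w)) * coth(hbar w / (2 kT))."""
    omega = math.sqrt(ks / mass)
    x = HBAR * omega / (2.0 * K_B * T)
    return HBAR / (2.0 * mass * omega) / math.tanh(x)


# Hypothetical example: a carbon-atom mass on a 10 N/m spring at 300 K.
ks, mass, T = 10.0, 12.0 * AMU, 300.0
ratio = quantum_variance(ks, mass, T) / classical_variance(ks, T)  # slightly above 1
```

For these values the quantum variance is only about 3% larger than the classical one. The ratio simplifies to x·coth(x) with x = ħω/2kT, which approaches 1 as the mass grows or the stiffness drops, consistent with the chapter’s point that thermal effects dominate at room temperature.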

I agree with this, but I dislike Drexler’s use of the word “adequately”; it should be replaced by quantitative bounds. However, harmonic oscillators are common in physics, so I will give this one to him, even though I disagree with bounded continuum models for nanomechanical systems.

Section 5.4: The big idea here is that “various molecular structures resemble rods”. Drexler uses the examples of DNA helices, microtubules, and ladder polymers, among others. He then adds nuance by approximating these rods first with a classical model of a continuous elastic rod, then with an exact quantum mechanical model of a rod consisting of a series of identical masses and springs (along with an approximation to that model). I think this is fine, and there really are fair molecular-biology analogies for rods. The problem, which we’ll address in future sections, is that rod logic has many frictional modes that Drexler didn’t anticipate, so the mechanical nanocomputers discussed in Chapter 12 unfortunately won’t scale to room-sized machines.
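The mass-and-spring picture of a rod can be checked numerically. This sketch (my own, with hypothetical unit masses and springs) builds the stiffness matrix for a chain of N identical masses with fixed ends and compares its normal-mode frequencies with the standard lattice formula ω_n = 2√(k/m) sin(nπ / (2(N+1))). The same normal modes underlie both the classical and quantum treatments; the quantum model then treats each mode as an independent quantum harmonic oscillator.

```python
import numpy as np


def chain_frequencies(n_masses, spring_k, mass):
    """Normal-mode angular frequencies of a fixed-end chain of identical masses and springs."""
    K = (2.0 * spring_k * np.eye(n_masses)
         - spring_k * np.eye(n_masses, k=1)
         - spring_k * np.eye(n_masses, k=-1))
    return np.sqrt(np.linalg.eigvalsh(K / mass))  # eigvalsh returns ascending values


def chain_frequencies_analytic(n_masses, spring_k, mass):
    """Closed form: w_n = 2 sqrt(k/m) sin(n pi / (2 (N + 1))), n = 1..N."""
    n = np.arange(1, n_masses + 1)
    return 2.0 * np.sqrt(spring_k / mass) * np.sin(n * np.pi / (2.0 * (n_masses + 1)))


numeric = chain_frequencies(20, 1.0, 1.0)
exact = chain_frequencies_analytic(20, 1.0, 1.0)
```

For n much smaller than N, sin(x) ≈ x gives ω_n ≈ nπ√(k/m)/(N+1), evenly spaced frequencies, which is the continuous-rod limit; the discrete chain departs from it mainly in the highest modes.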

Section 5.5 deals with the elastic bending of thermally excited rods. Frankly, the math is fine (there are no arithmetic mistakes, and it’s relatively simple calculus), so what I’m interested in are any unusual assumptions or conclusions. Three stood out to me. First, in Section 5.5.1b), in reference to ball-and-stick models, Drexler states that “To treat objects of such low aspect-ratio as rods is, at best, a crude approximation.” I agree. Second, in Section 5.5.3 Drexler makes an approximation for the positional variance of a rod that he says is “always high, but never by more than 1%.” I was skeptical of this claim at first, but Figure 5.12 shows that the calculations have been run for the dimensionless transverse variance of rods, neglecting shear compliance. What I am more skeptical of is whether this approach, an exact semi-continuum sum (N = infinity), is correct at all. I don’t have a good answer here, other than that more experiments need to be run to inform the theory. Finally, Drexler states that “quantum effects on positional uncertainty are minor for rods of nanometer or greater size.” I agree, and it’s why future scientific efforts should prioritize studying frictional effects over electron tunneling or other quantum effects.
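For a feel of the magnitudes involved, here is a classical estimate of the thermal transverse variance at the tip of a cantilevered rod. For a linear elastic structure in classical thermal equilibrium, the positional variance at a point equals kT times the static compliance at that point, so this sketch uses the Euler-Bernoulli tip stiffness 3EI/L³ for a solid circular cross section and neglects shear compliance. The diamond-like Young’s modulus of about 1 TPa, the 1 nm radius, and the 10 nm length are assumptions, and Nanosystems’ own expressions may be written differently.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def cantilever_tip_variance(length_m, radius_m, youngs_modulus_Pa, T=300.0):
    """Classical transverse tip variance kT / k_tip, with k_tip = 3 E I / L^3."""
    second_moment = math.pi * radius_m**4 / 4.0  # I for a solid circular section
    k_tip = 3.0 * youngs_modulus_Pa * second_moment / length_m**3
    return K_B * T / k_tip


# Assumed values: ~1 TPa (diamond-like), 1 nm radius, 10 nm length, 300 K.
var = cantilever_tip_variance(10e-9, 1e-9, 1.0e12)
sigma_nm = math.sqrt(var) * 1e9  # roughly 0.04 nm
```

Because the variance scales as L³/r⁴, doubling the length multiplies it by 8, while doubling the radius divides it by 16.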

Section 5.6: Piston Displacement in a gas-filled cylinder: Great point from Drexler (which agrees with my understanding): “Earlier sections have considered linear, elastic systems in which the motion can be divided into normal modes, treated as independent harmonic oscillators. A different approach is necessary for nonlinear systems in which the displacement of one component affects the range of motion possible to another, that is, for entropic springs.” I would say that this kind of analysis needs to be applied to other systems he mentioned, since linearity and elasticity are not something I would blindly assume for many nanomechanical systems, but let’s move on.
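Here is a sketch of why entropic springs need a different treatment. It assumes an ideal gas of N molecules in a cylinder, with the piston held by a constant external force chosen so that the most probable length is L0. Combining the gas free energy (-NkT ln L) with the work against the external force makes the piston-position PDF proportional to L^N · exp(-N·L/L0), which is not Gaussian; its exact variance (N+1)·L0²/N² differs from the linearized harmonic estimate L0²/N by a factor of (N+1)/N. The setup and numbers are my assumptions and not necessarily Drexler’s exact model.

```python
import numpy as np


def piston_variance_numeric(n_molecules, L0=1.0, n_grid=200_000):
    """Variance of piston position for p(L) ~ L^N exp(-N L / L0), by direct integration."""
    L = np.linspace(1e-6 * L0, 10.0 * L0, n_grid)
    log_p = n_molecules * np.log(L) - n_molecules * L / L0  # log space avoids overflow
    p = np.exp(log_p - log_p.max())
    dx = L[1] - L[0]
    p /= p.sum() * dx  # normalize the PDF on the uniform grid
    mean = (L * p).sum() * dx
    return ((L - mean) ** 2 * p).sum() * dx


N = 10
numeric = piston_variance_numeric(N)
exact = (N + 1) / N**2  # gamma-distribution variance, with L0 = 1
linearized = 1.0 / N    # harmonic estimate kT / k_eff with k_eff = N kT / L0^2
```

For N = 10 the linearized estimate is about 9% low; the gap shrinks as N grows, which is one quantitative way to ask when a linear model is good enough.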

Section 5.7: Longitudinal variance from transverse deformation: There was nothing weird in this section. The gist is that transverse vibrations of a rod shorten it longitudinally by forcing it to deviate from a straight line. The further analysis of this longitudinal-transverse coupling isn’t used in Nanosystems (although Drexler claims it is used in “other nanomechanical systems”), so, combined with the absence of unusual math or assumptions, it’s fine to ignore.
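The geometric coupling can be illustrated with the standard small-slope result: a rod of fixed contour length whose shape deviates from a straight line by y(x) has its end-to-end distance shortened by about ½∫(dy/dx)² dx. For a single half-sine mode of amplitude A over length L, this gives π²A²/(4L). The sketch compares that formula with a direct arc-length computation; the single-mode shape and the amplitude are hypothetical.

```python
import math

import numpy as np


def shortening_numeric(amplitude, length, n=100_001):
    """Contour length minus end-to-end length for y = A sin(pi x / L), via a fine polyline."""
    x = np.linspace(0.0, length, n)
    y = amplitude * np.sin(np.pi * x / length)
    arc_length = np.hypot(np.diff(x), np.diff(y)).sum()
    return arc_length - length


def shortening_small_slope(amplitude, length):
    """Small-slope approximation: (1/2) * integral of y'^2 dx = pi^2 A^2 / (4 L)."""
    return math.pi**2 * amplitude**2 / (4.0 * length)


# Hypothetical: 0.1 nm transverse amplitude on a 10 nm rod (lengths in nm).
numeric = shortening_numeric(0.1, 10.0)
approx = shortening_small_slope(0.1, 10.0)  # about 0.0025 nm
```

With A/L = 0.01 the two agree to better than 0.1%, so the small-slope formula is adequate at these amplitudes; the shortening is quadratic in amplitude, which is why it only matters for relatively large transverse excursions.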

Core Idea for Section 5.8: Elasticity, entropy, and vibrational modes: At room temperature, objects made of materials as stiff and light as diamond have positional uncertainties dominated by classical effects (not quantum) as long as their dimensions exceed one nanometer. Yes, I agree with this, but the problem is that we still don’t fully understand heat transport and frictional effects at the nanoscale either. Also, superlubricity is technically a classical effect (unlike superconductivity, it doesn’t rely on quantum-mechanical phenomena), but it is still not well understood despite being classical.

Conclusion: Positional uncertainty isn’t the big problem, or the interesting part, of nanomechanical systems. Friction is what you should be worrying about.

What Other Techniques Besides AFMs Are Interesting?

Hydrogen Depassivation Lithography (HDL): Zyvex has been doing this, and while it has improved, I don’t see it as truly competitive. It’s still interesting because it has experimental results, but I wouldn’t bet money on it.

MEMS: I don’t think that this is the move. Yes, Feynman had the idea of machines that make smaller machines, which in turn make still smaller machines, mirroring how the industrial revolution bootstrapped itself with machines that made other machines, but it feels like a mediocre strategy. MEMS also tend not to work from the principles of nanomachines (which molecular biology does, kind of), so I don’t think they’re a good analogy to reason from. They’re a local optimum; we can do better.

Optical Tweezers: My verdict is that this is a great technology for scaling qubits but not for much else. Despite the 2022 atom-throwing paper, the 2024 Caltech 6,100-atom paper, and the cool science (literally, since it’s ultracold) of optical tweezer arrays (which are kind of like egg cartons of light that atoms can slide into), which you can read about here, I don’t see it as a good technology for APM. It doesn’t have the massive industrial scaling of beam methods, it isn’t as precise for manipulating atoms as AFMs are, and nanophotonics/plasmonics are, well, still very much open scientific questions. This technology is fascinating and should be pursued further, just not necessarily for the purposes of APM.

Synthetic biology/Protein Blocks/DNA Nanotechnology/Spiroligomers: First, I acknowledge that this lumps together many things and that some folks are justifiably going to be mad at me for it. Second, I hope all research in these areas continues, as they are fascinating and will likely greatly benefit humanity, especially since biologists and other scientists (such as physicists) need to talk to each other a lot more. However, I don’t feel that these technologies are a meaningful step toward high-throughput atomically precise manufacturing; they feel more like a local optimum that sounds smart but isn’t the correct pathway. I hope someone can convince me otherwise, but if one were not using NC-AFMs, I would instead recommend the following: